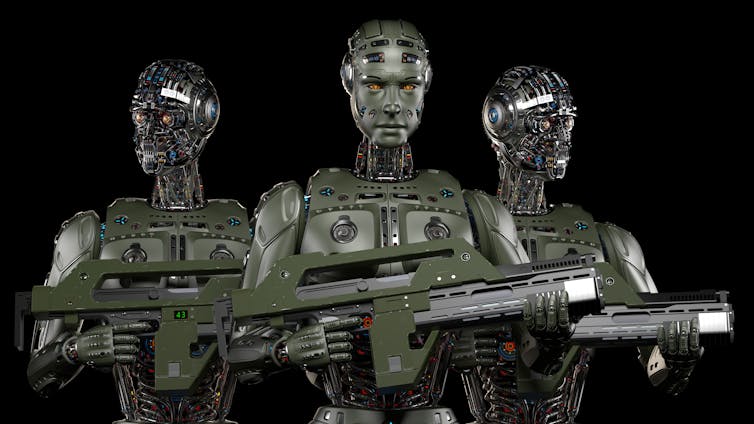

From self-driving cars to digital assistants, artificial intelligence (AI) is fast becoming an integral technology in our lives today. But the same technology that can help make our day-to-day lives easier is also being incorporated into weapons for use in combat situations.

Weaponised AI features heavily in the security strategies of the US, China and Russia. Some existing weapons systems already include autonomous capabilities based on AI, and developing weaponised AI further means machines could potentially make decisions to harm and kill people based on their programming, without human intervention.

Countries that back the use of AI weapons claim it allows them to respond to emerging threats at greater than human speed. They also say it reduces the risk to military personnel and increases the ability to hit targets with greater precision. But outsourcing use-of-force decisions to machines violates human dignity. And it’s also incompatible with international law, which requires human judgement in context.

Indeed, the role that humans should play in use-of-force decisions has been an increasing focus of many United Nations (UN) meetings. And at a recent UN meeting, states agreed that it’s unacceptable on ethical and legal grounds to delegate use-of-force decisions to machines – “without any human control whatsoever”.

But while this may sound like good news, there continue to be major differences in how states define “human control”.

The problem

A closer look at different governmental statements shows that many states, including key developers of weaponised AI such as the US and UK, favour what’s known as a distributed perspective of human control.

This is where human control is present across the entire life-cycle of a weapons system – from development to use, and at various stages of military decision-making. But while this may sound sensible, it actually leaves a lot of room for human control to become more nebulous.

Taken at face value, recognising human control as a process rather than a single decision is correct and important. And it reflects operational reality, in that there are multiple stages to how modern militaries plan attacks involving a human chain of command. But there are drawbacks to relying upon this understanding.

It can, for example, uphold the illusion of human control when in reality it has been relegated to situations where it does not matter as much. This risks making the overall quality of human control in warfare dubious, in that it is exerted everywhere generally and nowhere specifically.

This could allow states to focus more on early stages of research and development and less so on specific decisions around the use of force on the battlefield, such as distinguishing between civilians and combatants or assessing a proportional military response – which are crucial to comply with international law.

And while it may sound reassuring to have human control from the research and development stage, this also glosses over significant technological difficulties: current algorithms are neither predictable nor understandable to human operators. So even if human operators supervise systems applying such algorithms when using force, they are not able to understand how these systems have calculated targets.

Life and death with data

Unlike those of machines, human decisions to use force cannot be pre-programmed. Indeed, the brunt of international humanitarian law obligations applies to actual, specific battlefield decisions to use force, rather than to earlier stages of a weapons system’s lifecycle. This was highlighted by a member of the Brazilian delegation at the recent UN meetings.

Adhering to international humanitarian law in the fast-changing context of warfare also requires constant human assessment. This cannot simply be done with an algorithm. This is especially the case in urban warfare, where civilians and combatants are in the same space.

Ultimately, to have machines that are able to make the decision to end people’s lives violates human dignity by reducing people to objects. As Peter Asaro, a philosopher of science and technology, argues: “Distinguishing a ‘target’ in a field of data is not recognising a human person as someone with rights.” Indeed, a machine cannot be programmed to appreciate the value of human life.

Many states have argued for new legal rules to ensure human control over autonomous weapons systems. But a few others, including the US, hold that existing international law is sufficient. However, the uncertainty surrounding what meaningful human control actually is shows that more clarity, in the form of new international law, is needed.

Such law must focus on the essential qualities that make human control meaningful, while retaining human judgement in the context of specific use-of-force decisions. Without this focus, there’s a risk of undercutting the value of new international law aimed at curbing weaponised AI.

This is important because without specific regulations, current practices in military decision-making will continue to shape what’s considered “appropriate” – without being critically discussed.

Ingvild Bode, Associate Professor of International Relations, University of Southern Denmark

This article is republished from The Conversation under a Creative Commons license.
