It can be hard to tell whether a picture is real. Consider, as the participants in our recent research did, these two images and see whether you think neither, either or both of them has been doctored.

You might have based your assessment of the images on the visual information alone, or perhaps factored in your evaluation of how reputable the source is, or the number of people who liked and shared the images.

My collaborators and I recently studied how people evaluate the credibility of images that accompany online stories and what elements figure into that evaluation. We found that you’re far less likely to fall for fake images if you’re more experienced with the internet, digital photography and online media platforms – if you have what scholars call “digital media literacy.”

Who is duped by fakes?

Were you duped? Both of the images are fake.

We wanted to find out how much each of several factors contributed to the accuracy of people’s judgment about online images. We hypothesized that the trustworthiness of the original source might be an element, as might the credibility of any secondary source, such as people who shared or reposted it. We also anticipated that the viewer’s existing attitude about the depicted issue might influence them: If they disagreed with something about what the image showed, they might be more likely to deem it a fake and, conversely, more likely to believe it if they agreed with what they saw.

In addition, we wanted to see how much it mattered whether a person was familiar with the tools and techniques that allow people to manipulate images and generate fake ones. Those methods have advanced much more quickly in recent years than technologies that can detect digital manipulation.

Until the detectives catch up, there remains a high risk that ill-intentioned people will use fake images to influence public opinion or cause emotional distress. Just last month, during the post-election unrest in Indonesia, a man deliberately spread a fake image on social media to inflame anti-Chinese sentiment among the public.

Our research was intended to gain insight into how people decide whether the images they see online are authentic.

Testing fake images

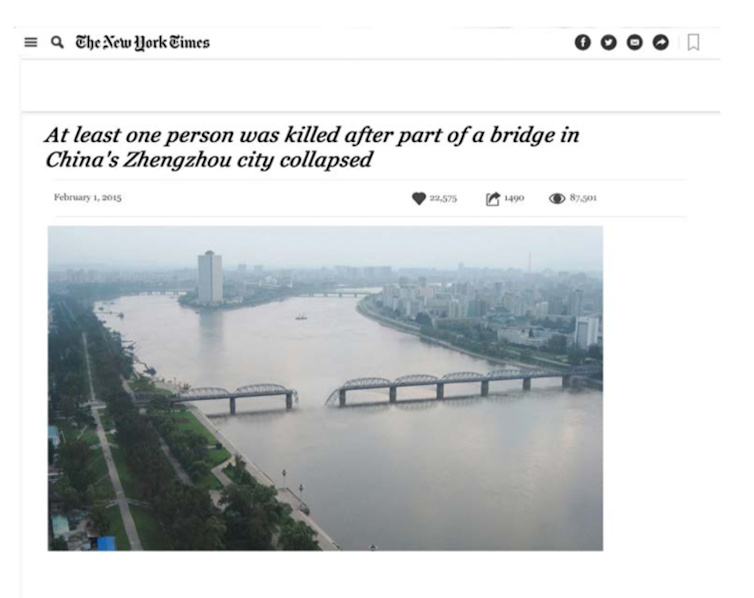

For our study, we created six fake photos on a diverse set of topics, including domestic and international politics, scientific discovery, natural disaster and social issues. Then we created 28 mock-up compositions of how each of those photos might appear online, such as shared on Facebook or published on The New York Times website.

Each mock-up presented a fake image accompanied by a short textual description about its content and a few contextual cues and features such as the particular place it purportedly appeared, information on what its source was and whether anyone had reshared it – as well as how many likes or other interactions had happened.

All of the images and accompanying text and information were fabrications – including the two at the top of this article.

We used only fake images to avoid the possibility that any participants might have come across the original image before joining our study. Our research did not examine a related problem known as misattribution, where a real image is presented in an unrelated context or with false information.

We recruited 3,476 participants from Amazon Mechanical Turk, all of whom were at least 18 and lived in the U.S.

Each research participant first answered a randomly ordered set of questions regarding their internet skills, digital imaging experience and attitude toward various sociopolitical issues. They were then presented with a randomly selected image mock-up on their desktop and instructed to look at the image carefully and rate its credibility.

Context didn’t help

We found that participants’ judgments of how credible the images were didn’t vary with the different contexts we put them in. When we put the picture showing a collapsed bridge in a Facebook post that only four people had shared, people judged it just as likely to be fake as when the image appeared to be part of an article on The New York Times website.

Instead, the main factors that determined whether a person could correctly perceive each image as a fake were their level of experience with the internet and digital photography. People who had a lot of familiarity with social media and digital imaging tools were more skeptical about the authenticity of the images and less likely to accept them at face value.

We also found that people’s existing beliefs and opinions greatly influenced how they judged the credibility of images. For example, when a person disagreed with the premise of the photo presented to them, they were more likely to believe it was a fake. This finding is consistent with studies showing what is called “confirmation bias,” or the tendency for people to believe a piece of new information is real or true if it matches up with what they already think.

Confirmation bias could help explain why false information spreads so readily online – when people encounter something that affirms their views, they more readily share that information among their communities online.

Other research has shown that manipulated images can distort viewers’ memory and even influence their decision-making. So the harm that can be done by fake images is real and significant. Our findings suggest that to reduce the potential harm of fake images, the most effective strategy is to offer more people experiences with online media and digital image editing – including by investing in education. Then they’ll know more about how to evaluate online images and be less likely to fall for a fake.

Mona Kasra, Assistant Professor of Digital Media Design, University of Virginia

This article is republished from The Conversation under a Creative Commons license. Read the original article.
