Real-world cases and expert opinions on the present and future of audio deepfakes and AI-powered fraud, and on how to use AI to build a strong defense against malicious AI.

John Dow, the CEO of an unnamed UK-based energy firm, once got a call from his boss that he wishes he’d never answered. Confident that he was talking to the CEO of the firm’s German parent company, he followed the instruction to immediately transfer €220,000 (approx. $243,000) to a Hungarian supplier’s bank account.

After completing the transaction, John got another call from the “boss” saying the money had been reimbursed. However, he noticed that the purported reimbursement hadn’t gone through and that the call had been made from an Austrian phone number. At this point, John got a third call requesting a follow-up payment. That’s when he became sceptical and sounded the alarm.

The investigation found that the voice on the call belonged to a hacker using AI voice technology to spoof John’s boss. In fact, the fake voice was so close to the real one that John recognized his boss’s subtle German accent and the “melody” of his voice.

Audio deepfakes are gaining traction, and that’s creepy

According to Forbes, audio deepfakes are extremely hard to catch red-handed. Victims rarely have any evidence, as they don’t typically record inbound calls, and technology for detecting synthetic speech in real time is still in its infancy. As a result, the number of documented cyber fraud cases based on audio deepfakes remains very low. According to antivirus provider Symantec, in 2020 there were only three registered cases in which audio deepfakes were used against its clients.

“Applying machine learning technology to spoof voices makes cybercrime easier,” says Irakli Beridze, head of the Center on AI and Robotics at the UN Interregional Crime and Justice Research Institute.

I reached out to Jacob Sever, co-founder and CPO at Sumsub, an identity verification and compliance company, for a comment on the future of deepfakes.

“It still takes plenty of effort to make a truly realistic deepfake. But I believe that, in the future, creating them will take just a few clicks. Indeed, the deepfakes of the future will be so high-quality that you won’t be able to distinguish them from a real individual.

Once deepfakes can be made easily, we’ll be faced with a game of cat and mouse. That’s because the only way to fight malicious deepfakes would be through the same neural networks that create them. So, as deepfake and neural network technology become more sophisticated, we’ll have to upgrade our defences constantly.”

Another plausible scenario of malicious AI use that can harm businesses is robocalling.

If you answer a call and hear a recorded message instead of a live person, it’s a robocall. If you get many robocalls trying to sell you something, such calls are most likely illegal and are likely scams.

According to the Federal Communications Commission, robocalls are one of the biggest sources of consumer complaints it receives daily.

How does robocalling work?

Scammers use AI and ML to fully automate their robocalling systems, identify the types of victims most likely to respond, and better understand which logic and arguments make their schemes succeed. With an abundance of data points, cybercriminals refine their scams and launch more advanced and sophisticated attacks.

I talked to Nelson Sherwin, General Manager of PEO Companies, which provides HR and regulatory compliance services to businesses. The company fell victim to a flurry of autodialer calls early in 2020.

“Our old telephony system was one we somewhat inherited with our space, and it was working fine for us until this. There was no real way for our IT department to manage the influx of calls adequately.

It very quickly began to impact my employees’ capability to do business with our clients. Employees essentially had to disconnect their phones for several hours over a few days while working out a solution. We hastily but successfully switched to a software VoIP solution, which allowed our IT team to use a much better call management environment while providing the granularity needed to block the incoming calls much quicker.

Eventually, after what I assume were hundreds of repeated attempts that failed, they gave up on trying to bombard our phones again.”
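Sherwin doesn’t say which blocking rules the new VoIP system used. As a purely hypothetical illustration of the kind of granular call management he describes, here is a minimal Python sketch of a sliding-window rate limit per caller ID: a number that places more than `max_calls` calls within `window_s` seconds is blocked. The class name, parameters, and thresholds are assumptions, not features of any particular VoIP product.

```python
from collections import defaultdict, deque


class CallFloodBlocker:
    """Hypothetical per-caller rate limiter: block a caller ID that places
    more than `max_calls` calls within any `window_s`-second window."""

    def __init__(self, max_calls: int = 5, window_s: float = 60.0):
        self.max_calls = max_calls
        self.window_s = window_s
        self.history = defaultdict(deque)  # caller ID -> timestamps of recent calls
        self.blocked = set()               # caller IDs blocked from now on

    def allow(self, caller_id: str, now: float) -> bool:
        """Return True if the call should ring through, False if rejected."""
        if caller_id in self.blocked:
            return False
        calls = self.history[caller_id]
        # Drop timestamps that have fallen out of the sliding window.
        while calls and now - calls[0] > self.window_s:
            calls.popleft()
        calls.append(now)
        if len(calls) > self.max_calls:
            self.blocked.add(caller_id)  # too many calls in the window: block
            return False
        return True
```

For example, with `max_calls=3` and `window_s=60`, a number calling at 0, 5, 10, and 15 seconds has its fourth call rejected and stays blocked afterwards. A per-number limit like this assumes the autodialer keeps reusing the same caller IDs; it is a first line of defense, not a complete solution.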

How organizations use AI and ML to fight AI-driven cyber fraud and deepfakes

Ragavan Thurairatnam, co-founder and Chief of Machine Learning at Dessa, a startup developing AI to detect audio deepfakes, believes that with a traditional software-based approach it would be extremely difficult to work out the rules needed to catch deepfakes.

“On top of this, deepfake technology will constantly change, and traditional software would have to be rewritten by hand every time,” explains Thurairatnam. “AI, on the other hand, can learn to detect deepfakes on its own as long as you have enough data. In addition, it can adapt to new deepfake techniques as they surface even when detection is difficult to human eyes.”

To raise public awareness of the risks of AI-powered synthetic speech, Dessa shared its own example of the technology with a broader audience a few months ago. Using a proprietary speech synthesis model, the team built RealTalk, a recreation of popular podcaster Joe Rogan’s voice.

How the deepfake detector works

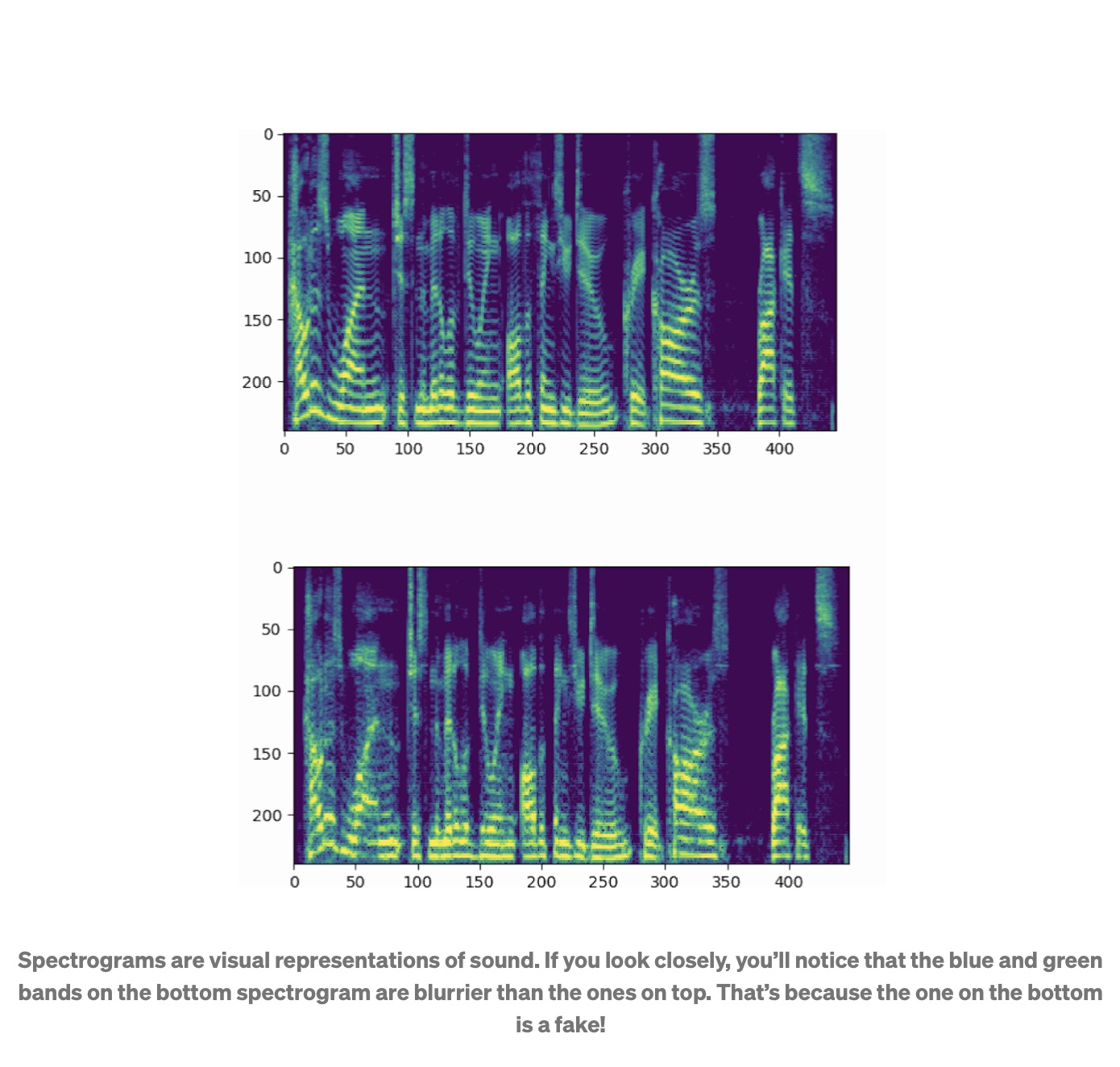

To distinguish between real and fake audio, the detector uses spectrograms, visual representations of audio that are also used to train speech synthesis models. Although real and fake audio sound almost identical to the unsuspecting ear, their spectrograms differ.
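To make “spectrogram” concrete, here is a minimal NumPy sketch that turns a raw waveform into a log-mel spectrogram, the kind of input Dessa’s model takes. The 16 kHz sample rate, frame size, hop length, and number of mel bands are assumptions chosen for illustration, not Dessa’s settings; in practice, a library such as librosa (`librosa.feature.melspectrogram`) would typically do this step.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style mel scale: roughly linear below 1 kHz, logarithmic above.
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def log_mel_spectrogram(audio, sr=16000, n_fft=512, hop=160, n_mels=40):
    """Return an (n_mels, n_frames) log-mel spectrogram of a 1-D signal."""
    audio = np.asarray(audio, dtype=float)
    if len(audio) < n_fft:
        raise ValueError("audio must contain at least n_fft samples")

    # Short-time Fourier transform: Hann-windowed overlapping frames.
    window = np.hanning(n_fft)
    n_frames = 1 + (len(audio) - n_fft) // hop
    frames = np.stack([audio[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2  # (n_frames, n_fft // 2 + 1)

    # Triangular mel filterbank spanning 0 Hz to the Nyquist frequency.
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fbank = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):   # rising edge of the triangle
            fbank[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge of the triangle
            fbank[m - 1, k] = (right - k) / max(right - center, 1)

    mel_power = fbank @ power.T          # (n_mels, n_frames)
    return np.log(mel_power + 1e-10)     # log compresses the dynamic range
```

Each column is one 32 ms frame and each row one mel band; a detector then looks for patterns in this 2-D “image” that differ between genuine and synthesized speech.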

Engineers at Dessa trained their detector on Google’s 2019 ASVspoof dataset, which contains over 25,000 audio clips of fake and real voices from a wide variety of male and female speakers.

The company built a deep neural network that uses temporal convolution. Raw audio is preprocessed and then converted into a mel-frequency spectrogram, which serves as the model’s input. The model performs convolutions and uses masked pooling to prevent overfitting. Finally, the output is passed into a dense layer and a sigmoid activation function, which outputs a predicted probability between 0 (fake) and 1 (real).
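The pipeline described above can be sketched as a NumPy forward pass: a 1-D temporal convolution over log-mel frames, masked mean pooling, a dense layer, and a sigmoid. This is a toy, untrained model under stated assumptions: the single conv layer, layer sizes, ReLU activation, and random weights are hypothetical, and the source does not describe Dessa’s exact architecture. Here the mask excludes zero-padded frames from the average so that clips of different lengths can share one input shape; the article attributes Dessa’s use of masked pooling to preventing overfitting, and their exact variant isn’t specified.

```python
import numpy as np

rng = np.random.default_rng(0)


def temporal_conv(x, weights, bias):
    """'Valid' 1-D convolution over time. x: (T, C_in); weights: (K, C_in, C_out)."""
    k = weights.shape[0]
    t_out = x.shape[0] - k + 1
    out = np.empty((t_out, weights.shape[2]))
    for t in range(t_out):
        # Contract the K x C_in window of frames against the kernel.
        out[t] = np.tensordot(x[t:t + k], weights, axes=([0, 1], [0, 1])) + bias
    return np.maximum(out, 0.0)  # ReLU


def masked_mean_pool(h, mask):
    """Average over time steps where mask is true, ignoring padded steps."""
    m = mask[:, None].astype(h.dtype)
    return (h * m).sum(axis=0) / np.maximum(m.sum(), 1.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class ToyDetector:
    """Hypothetical sizes and untrained random weights, for illustration only."""

    def __init__(self, n_mels=40, channels=32, kernel=5):
        self.kernel = kernel
        self.w1 = rng.normal(0.0, 0.1, (kernel, n_mels, channels))
        self.b1 = np.zeros(channels)
        self.w_out = rng.normal(0.0, 0.1, channels)  # dense layer to one logit
        self.b_out = 0.0

    def predict(self, spec, n_valid):
        """spec: (T, n_mels) log-mel frames, possibly padded; n_valid: real frames.
        Returns a probability in (0, 1), where 0 = fake and 1 = real."""
        h = temporal_conv(spec, self.w1, self.b1)
        # Conv step t sees frames t..t+kernel-1, so keep steps that touch only real frames.
        mask = np.arange(h.shape[0]) < max(n_valid - self.kernel + 1, 1)
        pooled = masked_mean_pool(h, mask)
        return float(sigmoid(pooled @ self.w_out + self.b_out))
```

Because the mask depends only on `n_valid`, whatever fills the padded tail of `spec` has no effect on the prediction.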

As a result, the detector can currently identify over 90% of audio deepfakes, which puts it at the forefront of the fight against AI fraud.
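The article doesn’t say which metric the “over 90%” figure refers to. As a hedged illustration, this Python sketch computes two common measures from a detector’s outputs: the share of fake clips it catches and the share of genuine clips it wrongly flags. It follows the article’s convention (0 = fake, 1 = real); the function name, the 0.5 threshold, and the sample numbers are made up.

```python
import numpy as np


def detection_metrics(p_real, is_fake, threshold=0.5):
    """p_real: detector outputs (0 = fake, 1 = real); is_fake: ground-truth labels.
    Assumes both classes are present. Returns (detection_rate, false_alarm_rate)."""
    p_real = np.asarray(p_real, dtype=float)
    is_fake = np.asarray(is_fake, dtype=bool)
    flagged = p_real < threshold                 # low P(real) => predicted fake
    detection_rate = flagged[is_fake].mean()     # fakes correctly caught
    false_alarm_rate = flagged[~is_fake].mean()  # genuine clips wrongly flagged
    return float(detection_rate), float(false_alarm_rate)


rate, false_alarms = detection_metrics(
    [0.1, 0.2, 0.7, 0.05, 0.9, 0.8, 0.3],
    [True, True, True, True, False, False, False],
)
```

With this sample data, 3 of 4 fakes are caught (0.75) and 1 of 3 genuine clips is flagged. Reporting both numbers matters: a detector that flags everything catches 100% of fakes but is useless in practice.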

Building predictive flags for AI fraud potential

When an enterprise builds decision-making insights on fraudulent data, the consequences can be dire: product design misses the mark, while faulty competitive intelligence or bad brand experiences damage reputation and user loyalty. This drives companies with firsthand experience of AI-driven fraud to develop defensive technologies.

One example is DISQO, an audience insights platform that has modernized the panel-based approach to consumer surveys. In particular, the company adopted cloud-based technology that lets users easily participate in research anywhere, on any device. DISQO asks people whether they are willing to share their opinions and online behaviors, and rewards them for doing so.

“This is the antithesis of prevalent ad tech solutions that use technology to deduce consumer identity and follow them online without their full understanding or consent,” says Bonnie Breslauer, DISQO’s Chief Customer Officer.

DISQO’s approach has skyrocketed in popularity, with more than 10M users to date and more than $25M in cash rewards paid out.

But wherever there’s money to be made, thieves are sure to follow. Drawn by the compensation for survey participation, and likely spurred by the pandemic, fraudsters generated a huge spike in fraudulent activity in 2020. This prompted DISQO to take the lead in fighting fraud by developing defensive technologies that keep cheaters out of its panel.

DISQO’s data scientists built predictive flags for fraud potential by studying good and bad interactions with their platform. When they needed more computational power, they moved to the Spark engine, which gave the team the “horsepower” needed for next-level machine learning. DISQO created a scoring system to rank panelists’ behaviors that might indicate fraudulent activity. Using Bayesian optimization, the team evolved this simple initial grading system into a model tuned for maximum fraud detection, which continuously improves through ML.
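DISQO hasn’t published its scoring rules, so the following is a hypothetical Python sketch of what a simple initial grading system might look like: each panelist gets a weighted sum of behavioral signals, and panelists are ranked by that score. The signal names and weights are invented for illustration. According to the article, DISQO then used Bayesian optimization to evolve its system; this sketch does not do that, though hand-set weights like these are the sort of parameters such tuning would adjust.

```python
from dataclasses import dataclass


@dataclass
class PanelistActivity:
    panelist_id: str
    # Hypothetical behavioral signals, each pre-normalized to [0, 1].
    speeding: float          # how much faster than typical the surveys were finished
    straightlining: float    # share of grid questions answered identically
    shared_device: float     # overlap of device fingerprints with other accounts
    failed_attention: float  # share of attention checks failed


# Hypothetical hand-set weights for an initial grading system (they sum to 1).
WEIGHTS = {"speeding": 0.3, "straightlining": 0.2, "shared_device": 0.3, "failed_attention": 0.2}


def fraud_score(activity):
    """Weighted sum of the signals; higher means more suspicious."""
    return sum(weight * getattr(activity, name) for name, weight in WEIGHTS.items())


def rank_panelists(activities):
    """Return panelists sorted from highest to lowest fraud score."""
    return sorted(activities, key=fraud_score, reverse=True)
```

A fixed weighted score is easy to audit, which makes it a reasonable starting point before replacing it with learned models.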

In less than six months, DISQO’s data science team achieved its best results by solving for the optimal fraud coverage segment and training the company’s machine learning models on a hand-picked set of classifiers. The team constructed a four-dimensional fraud vector whose components were the probabilities output by the hand-picked classifiers. One dimension held a CNN’s probability, and two others held XGBoost- and logistic-regression-based probabilities. The system learned to separate “good” panelists from “bad” ones.

DISQO now compares fraud vector magnitudes for every user to assess overall fraud risk. This allows DISQO to detect and remove “bad actors” while preserving a high-quality experience for the rest of the community.
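A minimal Python sketch of the magnitude comparison, assuming the fraud vector simply holds each classifier’s fraud probability and its magnitude is the Euclidean norm. The article names three of the four classifiers, so the fourth dimension is a placeholder; the dimension labels, dictionary layout, and 1.2 threshold are likewise hypothetical.

```python
import math

# Hypothetical labels for the four fraud-vector dimensions. The article names a
# CNN, an XGBoost model, and a logistic-regression model; the fourth is unknown.
DIMENSIONS = ("cnn", "xgboost", "logistic", "fourth_classifier")


def fraud_magnitude(probs):
    """Euclidean norm of per-classifier fraud probabilities (0.0 to 2.0 for 4 dims)."""
    return math.sqrt(sum(probs[d] ** 2 for d in DIMENSIONS))


def flag_bad_actors(users, threshold=1.2):
    """Return user IDs whose fraud-vector magnitude exceeds a hypothetical threshold."""
    return [uid for uid, probs in users.items() if fraud_magnitude(probs) > threshold]


users = {
    "u1": {"cnn": 0.9, "xgboost": 0.8, "logistic": 0.7, "fourth_classifier": 0.6},
    "u2": {"cnn": 0.1, "xgboost": 0.2, "logistic": 0.1, "fourth_classifier": 0.0},
}
flagged = flag_bad_actors(users)
```

Because each component is a probability in [0, 1], a user who looks suspicious to several classifiers at once (for example, 0.7 from each, magnitude 1.4) scores higher than one flagged with full certainty by a single model (magnitude 1.0).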

Final Thoughts

Shane Lewin, VP of AI/ML at GSK and a former Principal at Microsoft AI and Research Group, believes there’s nothing inherently malicious about AI.

“Facebook currently runs an AI-powered feed that is constantly learning how to keep you engaged on the platform. It exploits parts of your brain that reinforce community and social structure with the intent for more screen time. Those AI models are quite effective but not without cost — a convincing and overwhelming body of evidence suggests it’s quite harmful to your productivity, relationships, happiness, and even health.

I’ve not personally been subjected to deepfakes, but I’ve seen the impact firsthand — a targeted and deliberate deepfake campaign leads to real reputation and health impacts to an innocent victim.

Racial and gender bias is rampant in the search algorithms on career pages, notably LinkedIn. This is actually not malicious in intent, but the AI has learned the behavior of the bias in users, based on their clicks; or the biases of the people who trained it.

There’s nothing inherently malicious about AI — it’s just software that gets iteratively better at some defined function. The analogy is a human businessperson trying to make more money. They might try a wide variety of strategies and increase usage and investment in what works, but our value system constrains most humans.

There are lines we won’t cross, things that are too odious to profit from. These rules (we call them values) limit our profit to a degree, but society is better for having them. Alas, not all humans are self-constrained. There’s a class of us that we have names for, ranging from selfish to narcissistic to psychopathic, but all of them describe individuals who are too lax with our values. Society has learned to reject these people, to a degree at least.

AI is the same. If it’s not constrained by some value system, it will adopt whatever strategy is optimal without any sense of values or ethics. The Facebook algorithm says maximize screen time when it should say maximize screen time within constraints, above which it becomes dangerous to mental health (or better yet, just turn the personalized feed off). LinkedIn should maximize relevance without any signals that might convey bias (such as user click data or racial identifiers). It’s quite possible to build ethical AI if you’re willing to put values above profit.”

I couldn’t agree more!

There are many malicious companies, led by malicious executives, deliberately maximizing profit in an evil way. And to date, there’s no better way to fight AI-based fraud than using AI.

Fight fire with fire!

This article by Vik Bogdanov is republished from HackerNoon.
