As Artificial Intelligence (AI) becomes increasingly intertwined with human lives, addressing the ethics of its use becomes increasingly important. Human ethics, however, is highly subjective and inconsistent, which makes it difficult to embed in any sort of code that an AI could follow.

In its paper, the World Economic Forum (WEF) proposes some ways of implementing ethics in AI. Here, we condense the key issues it discusses.

Ethical Concerns in AI applications

Drastic technological developments often render human jobs obsolete. The first concern, therefore, is that the increasing use of AI will lead to job losses. However, the growing popularity of AI can also be viewed as opening the door to new opportunities, which should balance out these losses.

Other concerns cannot be remedied as easily as job losses. For instance:

- In the event of AI failure, should the designer be held accountable, even though the machine decided autonomously?

- How can transparency be established in AI, when the technology's massive and complex networks veil much of what the system does?

- As we grow more and more dependent on AI in our actions and decisions, will we lose our autonomy and social relationships?

- How can privacy be maintained when AI is so heavily information-driven and uses massive amounts of our personal information?

- How can we make sure that AI will not be manipulated by attackers and in turn be used to manipulate others?

Means to implement ethics in AI applications

Despite all these concerns, we cannot deny the benefits that AI can offer us. We therefore have to minimize the risks of using this technology. Some traditional self-regulatory measures for AI are as follows.

01. Technical means and mechanisms

This involves embedding ethics into AI by design. Some of the methods being considered are:

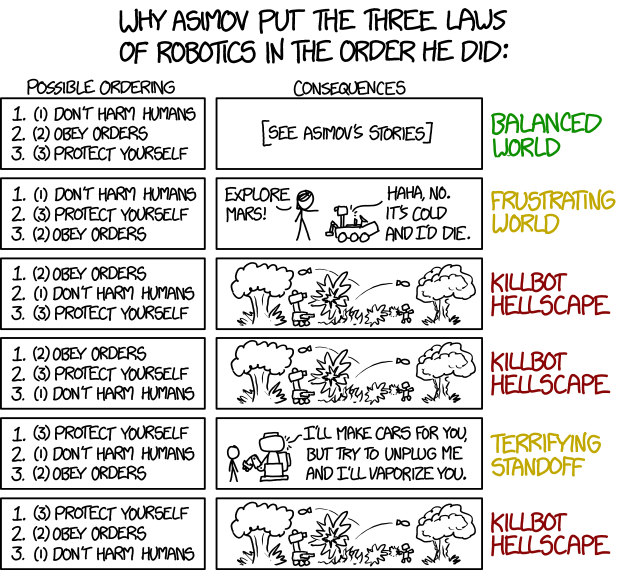

Bottom-up versus top-down approaches

The designers may embed the ethical principles into the AI directly. This is known as the top-down approach. Alternatively, the AI can be instructed to observe humans and mimic their behaviour. This is the bottom-up approach.

The advantage of the top-down approach is that the designer has more control over the principles that the AI will adopt. With a bottom-up approach, the AI will conform to whatever is common among humans, which as we know isn’t necessarily the ethical choice.
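The contrast between the two approaches can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: the action names, the `FORBIDDEN_ACTIONS` set, and both functions are hypothetical, and "mimicking humans" is reduced to picking the most frequently observed action.

```python
from collections import Counter
from typing import List

# Top-down: the designer writes the principle directly.
# The forbidden actions are hypothetical examples.
FORBIDDEN_ACTIONS = {"deceive_user", "share_private_data"}


def top_down_allows(action: str) -> bool:
    """Permit an action unless the designer has explicitly forbidden it."""
    return action not in FORBIDDEN_ACTIONS


# Bottom-up: the system copies whatever it most often observes humans doing.
def bottom_up_choice(observed_actions: List[str]) -> str:
    """Return the most frequently observed human action."""
    return Counter(observed_actions).most_common(1)[0][0]


# Hypothetical observations in which the common behaviour is not the ethical one.
observations = ["share_private_data", "share_private_data", "ask_consent"]
learned = bottom_up_choice(observations)

print(learned)                   # share_private_data
print(top_down_allows(learned))  # False
```

In this sketch the bottom-up learner picks up the majority behaviour, while the top-down rule rejects that same behaviour because the designer ruled it out in advance.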

Casuistic Approach

This approach involves identifying all the key situations in which the AI will have to make a decision and programming its response to each. The problem with this approach is that a self-teaching AI may eventually decide that it knows better than its original programmers, leading to non-compliance with the programmed responses.
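At its simplest, a casuistic design resembles a lookup table from situations to pre-programmed responses. The sketch below is illustrative only: the situations, the responses, and the `escalate_to_human` fallback for unanticipated cases are all assumptions and do not come from the WEF paper.

```python
# Hypothetical situations and responses, chosen only for illustration.
CASE_RESPONSES = {
    "pedestrian_ahead": "brake",
    "user_requests_private_data": "ask_for_consent",
}


def casuistic_response(situation: str) -> str:
    """Return the pre-programmed response, or defer to a human if none exists."""
    # The fallback is an assumption; a real system would need its own policy
    # for situations the designers did not anticipate.
    return CASE_RESPONSES.get(situation, "escalate_to_human")


print(casuistic_response("pedestrian_ahead"))   # brake
print(casuistic_response("unforeseen_event"))   # escalate_to_human
```

The table also exposes a practical limit of the approach: every situation has to be listed by hand, and anything unlisted needs a separate fallback.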

Dogmatic Approach

This approach embeds the principles of a particular school of thought into the AI. Implementing it remains a challenge, though. Moreover, even if it is implemented successfully, it runs the risk of producing an AI that is slavishly compliant with the principles of that particular school.

Implementing AI on a technical meta-level

This method employs the use of a monitoring system that is also AI-driven. A “guardian AI” can be developed to monitor, control, and report the behaviour of the AIs it oversees.
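The monitor, control, and report roles of such a guardian can be sketched as a thin wrapper around the actions other systems propose. This is a minimal sketch, not the WEF's design: the `Guardian` class, the agent names, and the acceptance rule are hypothetical, and the AI-driven judgement is replaced here by a plain function for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Guardian:
    """Checks proposed actions, blocks unacceptable ones, and keeps a report."""

    # Stand-in for the guardian's own (AI-driven) judgement; hypothetical.
    is_acceptable: Callable[[str], bool]
    report: List[str] = field(default_factory=list)

    def supervise(self, agent_name: str, proposed_action: str) -> bool:
        """Return True if the action may proceed; otherwise block and log it."""
        if self.is_acceptable(proposed_action):
            return True
        self.report.append(f"{agent_name}: blocked {proposed_action}")
        return False


guardian = Guardian(is_acceptable=lambda action: action != "share_private_data")

print(guardian.supervise("assistant_a", "answer_question"))     # True
print(guardian.supervise("assistant_b", "share_private_data"))  # False
print(guardian.report)  # ['assistant_b: blocked share_private_data']
```

Note that the guardian is only as good as the rule it is given, which is still written by humans.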

On their own, the technical means of regulation are insufficient. These methods assume that the humans involved in development will design the machines in an ethically aligned manner.

02. Policy-making instruments

To ensure that humans comply with an ethical standard, the technical means of regulation must be used in conjunction with policy-making instruments. These include:

- Legislation

- International resolutions and treaties

- Bilateral investment treaties

- Self-regulation and standardization

- Certification

- Contractual rules

- Soft law

- Agile governance

- Monetary incentives

Two practical approaches to implement ethics in AI systems

In answer to the difficulty of embedding ethics into AI systems, two practical approaches to implementing ethics in AI and other autonomous systems are being put forward:

01. The IEEE Global Initiative

The Institute of Electrical and Electronics Engineers (IEEE) Initiative on Ethics of Autonomous and Intelligent Systems aims to educate, train, and empower designers to put ethics at the top of their considerations when developing AI.

The product of this initiative is the P7000 series, a set of documents containing specific ethical operational standards with which designers can comply.

02. The WEF project on Artificial Intelligence and Machine Learning

Some of the governance projects for AI and other technologies that WEF is working on in cooperation with governments, businesses, and civil society include:

Unlocking public-sector AI

Implementing baseline standards for the public sector’s responsible acquisition and deployment of AI will dispel concerns over the use of AI in government. Apart from empowering governments with new capabilities, this will also prod the private sector to adopt the standards.

AI Board leadership toolkit

This is a set of tools which will enable board members and decision-makers to understand the benefits and risks of AI. This will also shed light on the importance of responsible design, development, and deployment of AI.

Generation AI

This project focuses on developing protective measures for children, in order to address concerns about privacy and security.

Teaching AI ethics

The WEF Global Future Council on Artificial Intelligence and Robotics is creating material to aid faculty who wish to integrate ethics into their AI coursework.

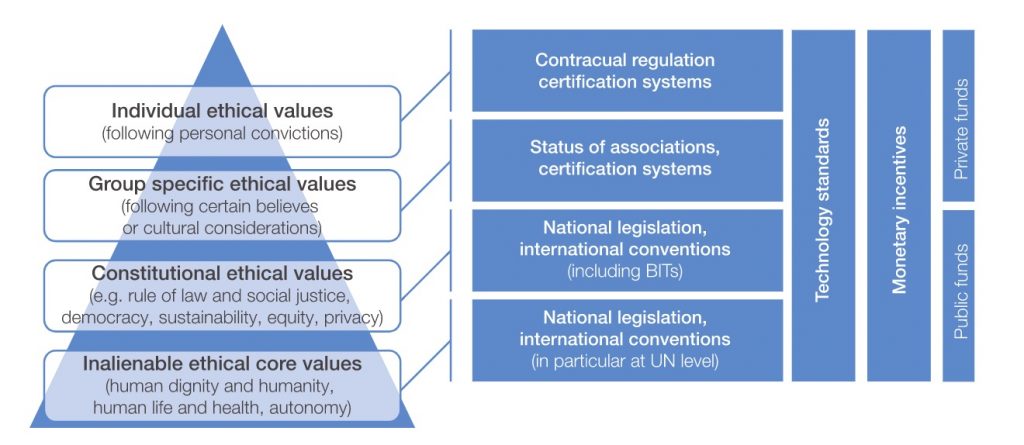

Determining the appropriate regulation design

WEF proposes that policy-makers should take ethical diversity into account and be guided by a graded governance system to pinpoint the appropriate method of regulation.

To maximize the benefits of AI, we have to ensure that the risks it poses are minimized. Policy-makers and designers should start taking the ethical implications into consideration before it is too late.
