As the United States heads into a presidential election at a time when it’s harder than ever to root out fake news online, Microsoft is releasing a new tool aimed at better detecting AI-generated deepfakes.

The software, the product of a partnership between the tech giant and the hybrid nonprofit-commercial enterprise AI Foundation, can determine the likelihood that a face in a still image or video was generated or manipulated using AI. It is currently available only to select organizations “involved in the democratic process,” including news outlets and political campaigns.

Microsoft is also partnering with media companies like The New York Times, BBC and CBC/Radio-Canada on a more extensive effort to test the technology and form a set of standards around authenticating media in the digital age. In addition, the company is releasing a service that will allow content producers to certify that the videos they release are authentic.

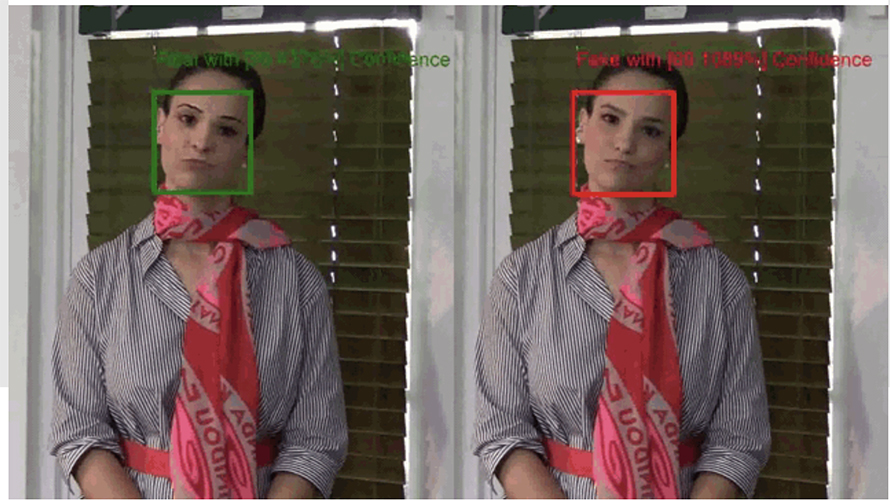

The deepfake detection tool, called Microsoft Video Authenticator, works by analyzing imagery for subtle distortions or irregularities that may be imperceptible to the casual observer. It draws on a system created by the AI Foundation called Reality Defender, which the organization already provides to media outlets as part of its core business.

“Separating fact from fiction online represents one of the greatest challenges for democracy,” Tom Burt, corporate vice president for security and trust at Microsoft, said in a statement. “Reality Defender has the ability to help campaigns, newsrooms, and others use a range of technology quickly and responsibly to determine the truth. We believe this partnership can provide to organizations an important tool fundamental to free and fair elections.”

Researchers recently ranked deepfakes as the number one crime and terrorism threat posed by AI-related technology because of their potential to be used to spread dangerous misinformation and to enable blackmail or extortion. While manually manipulated media has become a flashpoint in the election in recent months, a recent study found that the primary nefarious use of deepfakes (footage fabricated specifically through machine learning) has been nonconsensual pornography.

BY PATRICK KULP