It’s no secret that algorithms run the world, powering everything from Google’s search results to Uber’s car-pool capabilities. But further under the hood is a more fundamental set of algorithms that underpins computing: if Google PageRank is the engine, these algorithms are the parts it’s built from.

To date, there haven’t been any long-term studies looking at where all these algorithms are being created. A new MIT-led study reveals that many of these parts were made in America, some by native-born Americans but increasingly also by immigrants working at U.S. institutions.

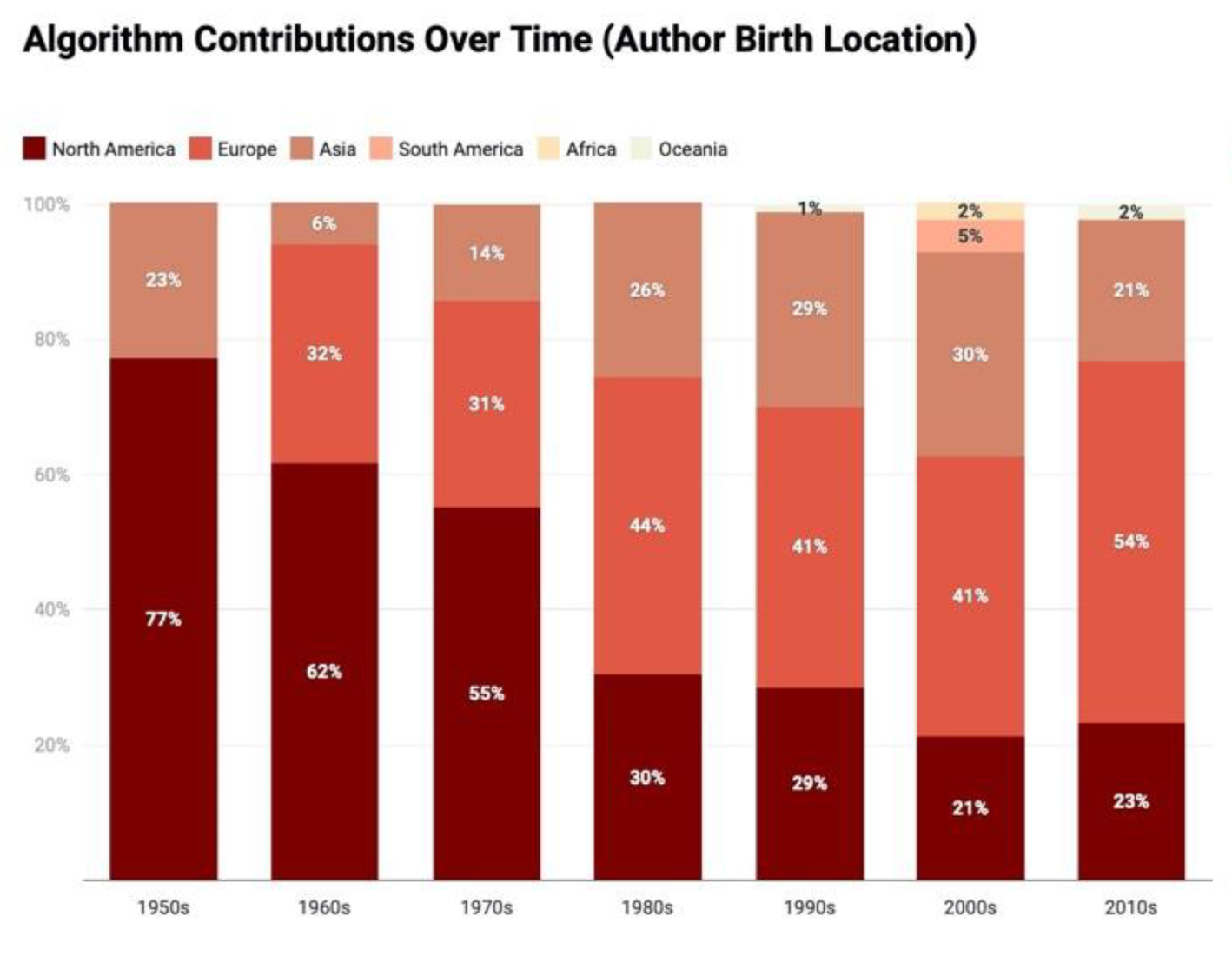

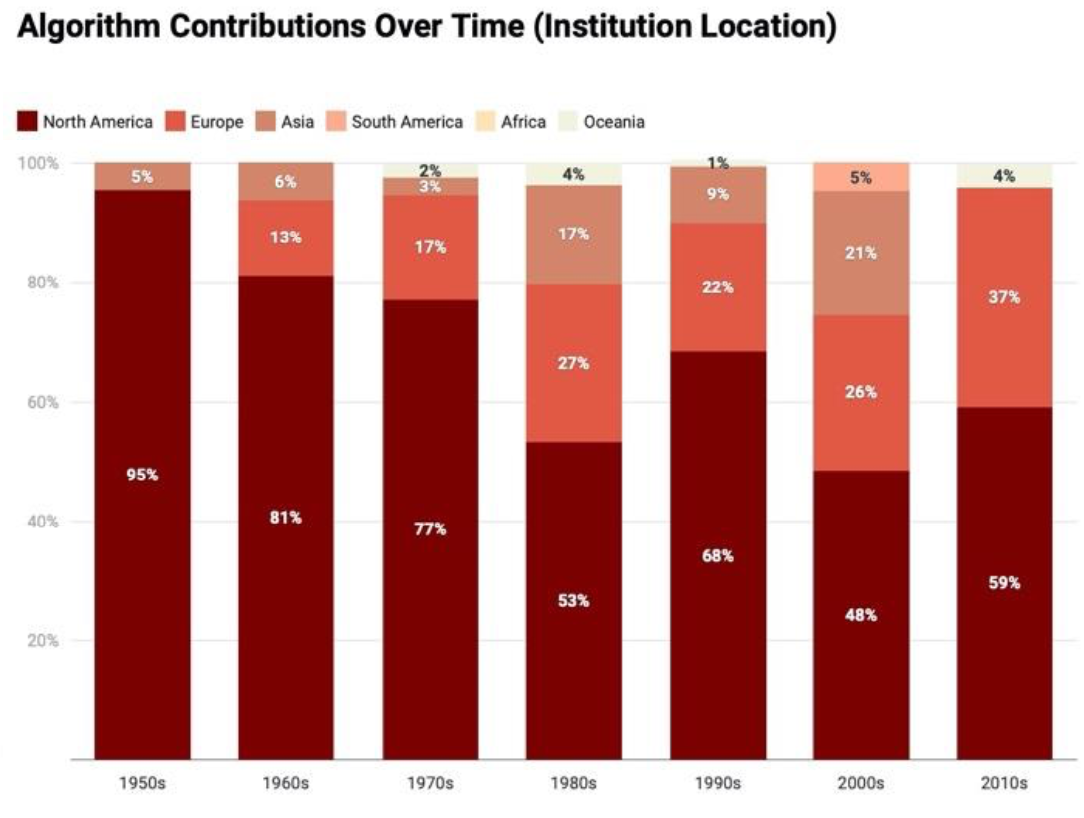

By analyzing improvements over 70 years in the 128 most important “families” of algorithms, researchers found that 64 percent of the improvements have come from researchers at American institutions, but that 40 percent of these are from scientists who originally hailed from other countries. In recent decades, the share of U.S.-based algorithmic innovations coming from foreign-born researchers has only become more important.

“If we want the United States to continue to be ground zero for computer science, we need to make sure that our policies make it easy to continue to bring international researchers to our institutions,” says Neil Thompson, a research scientist at MIT CSAIL and the Sloan School of Management.

The study shows that algorithmic progress to date has been disproportionately Western-centric. Although residents of North America and Europe make up only 15 percent of the world population, they have contributed more than three-quarters of the algorithms. The driving factor seems to be how wealthy a country is: GDP was found to matter more than population size in whether a country produces important algorithms.

“There’s a danger that algorithm development may suffer from the problem of ‘lost Einsteins,’ where those with natural talent in under-developed countries are unable to reach their full potential because of a lack of opportunity,” says Thompson.

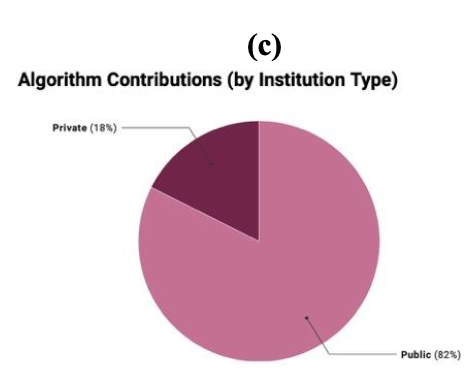

Another key finding highlighted the importance of federal funding for university research: 82 percent of the influential algorithms came from the work of nonprofits and public-facing institutions like universities, as opposed to private companies.

“Giving money to public institutions makes sense for this type of research,” says Thompson, who co-wrote the study with visiting Georgia Tech student Yash Sherry and former CSAIL researcher Shuning Ge. “It is much more efficient for society to have a university researcher discover a new algorithm and share it with the world than to have each company invent it separately.”

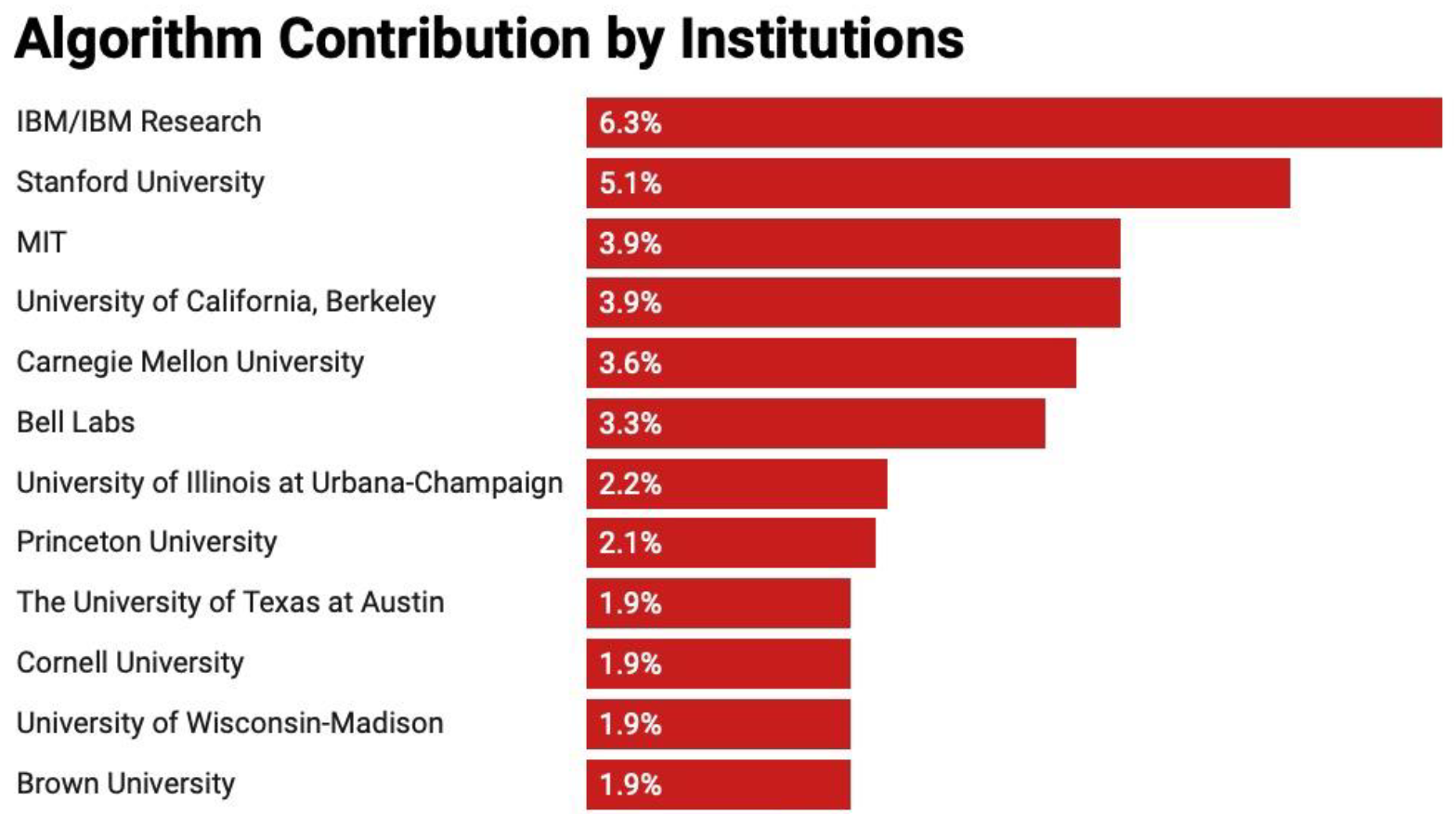

The five universities with the most contributions to the list were Stanford, MIT, the University of California at Berkeley, Carnegie Mellon, and the University of Illinois at Urbana–Champaign.

To develop the dataset, the team first pored over more than 1,000 research papers and 50 textbooks to create a list of about 300 algorithms that were either the first to solve particular problems or improved on state-of-the-art methods. These included everything from better list-sorting to the infamous “traveling-salesperson” problem, where the goal is to find the shortest route that visits each of a set of cities. Of those 300 algorithms, the team ultimately analyzed a subset of 180 for which information about authors and institutions could be sourced.

Collectively, the researchers refer to this set of fundamental algorithms as “The Algorithm Commons” because, like the Digital Commons, it represents advancements in knowledge whose benefits, they believe, can be widely shared.

“The great thing about algorithm improvement is that you get more output without having to put in more resources,” says Thompson. “Just as a productivity improvement for a business allows them to produce more output for a given set of inputs, an algorithmic improvement allows a computer to tackle bigger, harder problems for the same computational budget.”

The project coincides with ongoing work from Thompson and Sherry showing that algorithmic improvements have often rivaled and even exceeded the decades-long improvements in computer hardware that have come from Moore’s Law.

The team’s paper, just posted online, will be formally published in the February 2021 issue of the Global Strategy Journal. The project was supported in part by the Tides Foundation.

Source: MIT CSAIL