Can you fool Artificial Intelligence?

No?

Think again.

Three years ago, Apple launched the iPhone X with cutting-edge facial recognition technology. This advanced AI technique (Face ID) replaced the older fingerprint recognition technology (Touch ID).

The new technology was claimed to be more secure and robust. However, shortly after the launch of Face ID, researchers from Vietnam breached it by designing a 3D face mask.

Such crafted attacks against ML-based AI systems fall under the umbrella of a fascinating research field: adversarial machine learning. The attack against Face ID sparked new debates in cybersecurity: how secure are AI systems against adversarial attacks, and are such attacks practical in real-world scenarios?

In this article, we will explore the answers to these questions. We will also look at adversarial machine learning beyond cybersecurity, namely its application in AI software testing. But first, let’s understand what adversarial machine learning is.

What is Adversarial Machine Learning?

You are familiar with optical illusions. They trick our minds into seeing things that are not there. In adversarial machine learning, attackers design the equivalent of optical illusions for machine learning algorithms.

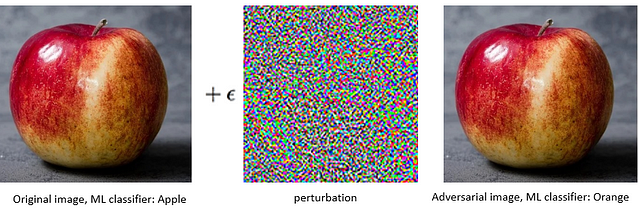

For example, say an AI system classifies apples and oranges. Now, look at the picture of the two apples. Both are images of the same apple. However, the image of the apple on the right is crafted a little differently.

An attacker has added a tiny perturbation to this image, causing the ML algorithm to misclassify it as an orange. The human eye cannot see any difference between the left and right apples. However, the AI system is fooled into seeing an orange.

The above illustration is inspired by the panda example given in this paper by the guru of adversarial machine learning: Ian Goodfellow.
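To make the idea of a tiny perturbation concrete, here is a minimal sketch in Python with NumPy. It applies the fast gradient sign method (FGSM), the technique behind the panda example, to a hypothetical hand-built linear classifier. The weights, the bias, the flat gray "image", and the step size `eps` are all assumptions chosen for illustration. This is not a real apple/orange model.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical toy classifier (an assumption for illustration):
# probability > 0.5 means "orange", otherwise "apple".
n_pixels = 1000
w = np.where(np.arange(n_pixels) % 2 == 0, 0.1, -0.1)  # made-up weights
b = -2.0                                               # made-up bias

def orange_probability(x):
    return sigmoid(x @ w + b)

def fgsm(x, true_label, eps):
    """Move every pixel by at most eps in the direction that increases the loss."""
    p = orange_probability(x)
    grad_wrt_x = (p - true_label) * w  # gradient of the cross-entropy loss w.r.t. x
    return np.clip(x + eps * np.sign(grad_wrt_x), 0.0, 1.0)

x_clean = np.full(n_pixels, 0.5)  # flat gray stand-in for the apple image
x_adv = fgsm(x_clean, true_label=0.0, eps=0.05)  # label 0.0 means "apple"

print("clean orange probability:      ", orange_probability(x_clean))
print("adversarial orange probability:", orange_probability(x_adv))
print("largest pixel change:          ", np.abs(x_adv - x_clean).max())
```

In this toy setup the orange probability moves from about 0.12 to about 0.95, even though no pixel changes by more than 0.05 on a 0-to-1 scale. The small per-pixel changes add up across many pixels, which is why the perturbation can stay invisible to a human and still flip the prediction.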

This example seems pretty straightforward to understand, right? There can be even simpler ways to sabotage AI systems.

There are also highly sophisticated attacks designed to evade machine learning and artificial intelligence systems.

In research, such attacks have been shown to sabotage a variety of safety-critical systems, including self-driving cars and medical imaging systems. Let’s have a look at them:

Fooling AI: Evasion Attacks

In evasion attacks, attackers design adversarial examples that fool the AI model. For example, attackers can target self-driving cars by using stickers or paint on road signs.

The sign appears the same to the naked eye; however, a self-driving car may interpret it differently. This may cause deadly accidents. The example of the Face ID breach given at the start of this article is also a type of evasion attack.

There can be a variety of evasion attacks, from simple image manipulation to sophisticated white-box attacks, in which the attacker has full knowledge of the model.

Poisoning AI: Data Poisoning Attacks

In poisoning attacks, the threat actor adds incorrect examples to the training data, producing a faulty, mutant model. The poisoned examples may look harmless, and such attacks are usually launched against self-learning or reinforcement learning systems.

For example, back in 2016, the interactive, self-learning chatbot Tay was poisoned (trained) by its users into a racist Nazi!
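The effect of poisoned training data can be sketched with a very small example. The snippet below is only an illustration under stated assumptions: the one-dimensional data, the nearest-centroid "model", and the helper names `fit_centroids` and `predict` are all hypothetical, and real poisoning attacks target far more complex systems.

```python
import numpy as np

def fit_centroids(X, y):
    """Train a nearest-centroid model: store the mean feature value per class."""
    return {int(label): float(X[y == label].mean()) for label in np.unique(y)}

def predict(centroids, x):
    """Return the class whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

# Clean training data (made up): class 0 clusters near 0.75, class 1 near 4.75.
X_clean = np.array([0.0, 0.5, 1.0, 1.5, 4.0, 4.5, 5.0, 5.5])
y_clean = np.array([0, 0, 0, 0, 1, 1, 1, 1])

# Poison: the attacker injects points that belong with class 1
# but carry the wrong label 0, dragging the class 0 centroid upward.
X_poison = np.array([6.0, 6.0, 6.0, 6.0])
y_poison = np.array([0, 0, 0, 0])

clean_model = fit_centroids(X_clean, y_clean)
poisoned_model = fit_centroids(
    np.concatenate([X_clean, X_poison]),
    np.concatenate([y_clean, y_poison]),
)

x_test = 3.5
print("clean model predicts:   ", predict(clean_model, x_test))
print("poisoned model predicts:", predict(poisoned_model, x_test))
```

With the clean data, the test point 3.5 is assigned to class 1. After four mislabeled points are added, the class 0 centroid shifts from 0.75 to 3.375, and the same test point is assigned to class 0. The model code never changed. Only the training data did.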

Rethinking AI Security and Software Testing

Software engineers are also using adversarial machine learning to test the robustness of AI systems. Researchers are combining software testing techniques with adversarial machine learning to generate and evaluate test data.
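One simple way to picture such a robustness test is a check that a prediction stays the same when the input is nudged slightly. The sketch below is a simplified stand-in, not a method taken from any specific research tool: `toy_model`, `is_robust`, the test inputs, and the tolerance `eps` are hypothetical, and it tries only single-feature nudges rather than running a real adversarial search.

```python
import numpy as np

def toy_model(x):
    """Hypothetical model under test: label 1 if the first feature is positive."""
    return int(x[0] > 0)

def is_robust(model, x, eps):
    """Check that the label is unchanged when any single feature moves by +/- eps."""
    expected = model(x)
    for i in range(len(x)):
        for delta in (-eps, eps):
            neighbor = x.copy()
            neighbor[i] += delta
            if model(neighbor) != expected:
                return False  # found a nearby input with a different label
    return True

# Made-up test inputs: the middle one sits very close to the decision boundary.
test_inputs = np.array([[1.0, 0.0], [0.01, 0.0], [-1.0, 0.0]])
results = [is_robust(toy_model, x, eps=0.1) for x in test_inputs]
print(results)  # [True, False, True]
```

The second input fails because a nudge of 0.1 pushes it across the decision boundary. In a test suite, failures like this point to inputs where the model is fragile and may need more training data or a design change.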

Humans wrestle with whether seeing is believing or believing is seeing. In the case of ML-based AI, learning is seeing and learning is believing.

How most complex AI systems learn is quite hard to follow. The key to secure and robust AI systems is thorough testing.

This article is republished from hackernoon.com