Scholars see deep learning’s pitfalls and limitations; the computer industry just sees a huge opportunity in matrix multiplications.

The era of deep learning began in 2006, when Geoffrey Hinton, a professor at the University of Toronto and one of the founders of that particular approach to artificial intelligence, theorized that greatly improved results could be achieved by adding many more artificial neurons to a machine learning program. The “deep” in deep learning refers to the depth of a neural network, that is, how many layers of artificial neurons data is passed through.

Hinton’s insight led to breakthroughs in the practical performance of AI programs on tests such as the ImageNet image recognition task. The subsequent fifteen years have been called the deep learning revolution.

A report put out last week by Stanford University, in conjunction with multiple institutions, argues that the dominance of the deep learning approach may fade in coming years, as it runs out of answers for tough questions of building AI.

“The recent dominance of deep learning may be coming to an end,” write the report’s authors. “To continue making progress, AI researchers will likely need to embrace both general- and special-purpose hand-coded methods, as well as ever faster processors and bigger data.”

The report, known formally as “The One Hundred Year Study of AI,” is the second installment in what is planned to be a series of reports every five years on the state of the discipline. The report is put together by a collection of academics who make up a standing committee and organize workshops whose findings are summarized in the study.

The report’s prediction about deep learning may be premature, for the simple reason that unlike in past eras, when AI was on the outskirts of computer science, the mathematics that power deep learning have now become firmly embedded in the world of commercial computing.

Hundreds of billions of dollars in market value is now ascribed to deep learning fundamentals. Deep learning, unlike any AI approach before it, is now the establishment.

Decades ago, companies with ambitious computing quests went out of business for lack of money. Thinking Machines was the gem of the 1980s – 1990s artificial intelligence quest. It went bankrupt in 1994 after burning through $125 million in venture capital.

The idea of today’s startups going bankrupt seems a lot less likely, stuffed as they are with unprecedented amounts of cash. Cerebras Systems, Graphcore, and SambaNova Systems have collectively raised billions, and they have access to lots more money in both debt and equity markets.

More important, the leader in AI chips, Nvidia, is a powerhouse that is worth $539 billion in market value and makes $10 billion annually selling chips for deep learning training and inference. This is a company with a lot of runway to build more and sell more and get even richer off the deep learning revolution.

Why is the business of deep learning so successful? It’s because deep learning, regardless of whether it actually leads to anything resembling intelligence, has created a paradigm for using faster and faster computing to automate a lot of computer programming. Hinton, along with his co-conspirators, has been honored for moving computer science forward, regardless of what AI scholars may think of their contribution to AI.

The authors of the AI100 report make the case that deep learning is running up against practical limits in its insatiable desire for data and compute power. The authors write:

But now, in the 2020s, these general methods are running into limits—available computation, model size, sustainability, availability of data, brittleness, and a lack of semantics—that are starting to drive researchers back into designing specialized components of their systems to try to work around them.

All that may well be true, but, again, that is a call to arms that the computer industry is happy to spend billions answering. The tasks of deep learning have become the target for the strongest computers. AI is no longer a special discipline; it is the heart of computer engineering.

It takes just fourteen seconds for one of the fastest computers on the planet, built by Google, to be automatically “trained” to solve ImageNet, according to results published earlier this year for the MLPerf benchmark suite. That is not a measure of thinking, per se; it is a measure of how fast a computer can transform input into output: pictures in, classification labels out.

All computers, ever since Alan Turing conceived of them, do one thing and one thing only: they transform a series of ones and zeros into a different series of ones and zeros. All that deep learning is, is a way for the computer to automatically come up with the rules of transformation rather than have a person specify the rules.
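To make that contrast concrete, here is a minimal sketch in Python. It is an illustration only, not anything from the AI100 report or from a production system. The function names, the toy rule y = 2x + 1, the learning rate, and the step count are all assumptions chosen for the example. One function has its rule written by a person; the other recovers roughly the same rule from example inputs and outputs using plain gradient descent.

```python
# Hand-coded rule: a person specifies the transformation directly.
def hand_coded(x):
    return 2.0 * x + 1.0


# Learned rule: start from arbitrary parameters and fit them to examples.
# (Hypothetical helper for illustration; real systems fit millions of
# parameters, not two.)
def learn_rule(examples, steps=2000, lr=0.05):
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Input/output pairs stand in for training data.
examples = [(x, hand_coded(x)) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
w, b = learn_rule(examples)
print(f"learned rule: y = {w:.3f} * x + {b:.3f}")
```

The learned parameters end up very close to the hand-written ones, even though nobody typed the rule into `learn_rule`. That, at toy scale, is the automation the industry is selling.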

What Google and Nvidia are helping to build is simply the future of all computers. Every computer program can benefit from having some of its transformations automated, rather than being laboriously coded by a person.

The incredibly simple approach that underlies that automation, matrix multiplication, is sublime because it is such a basic mathematical operation. It’s an easy target for computer makers.

That means that every chip is becoming a deep learning chip, in the sense that every chip is now a matrix multiplication chip.
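A rough sketch of why matrix multiplication is the whole game: in the simplified Python below, each layer of a network is just a weight matrix, and passing data through the network is a chain of matrix multiplications. The weights, the tiny layer sizes, and the choice of ReLU as the step between layers are assumptions made for illustration; real networks add bias terms and many other details.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both lists of rows."""
    return [
        [sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def relu(m):
    """Set negative entries to zero (one common nonlinearity between layers)."""
    return [[max(0.0, v) for v in row] for row in m]


def forward(x, layers):
    """Pass the input through every layer: one matrix multiply per layer."""
    for weights in layers:
        x = relu(matmul(x, weights))
    return x


x = [[1.0, 2.0]]  # one input with two features
layers = [
    [[1.0, -1.0], [0.5, 1.0]],  # layer 1: 2 inputs -> 2 outputs
    [[2.0], [1.0]],             # layer 2: 2 inputs -> 1 output
]
output = forward(x, layers)
print(output)  # [[5.0]]
```

Making the network "deeper" means adding more entries to `layers`, which means more calls to `matmul`. A chip that speeds up that one operation speeds up everything.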

“Neural nets are the new apps,” Raja M. Koduri, senior vice president and general manager of Intel’s Accelerated Computing Systems and Graphics Group, recently told ZDNet. “What we see is that every socket, it’s not CPU, GPU, IPU, everything will have matrix acceleration,” said Koduri.

When you have a hammer, everything is a nail. And the computer industry has a very big hammer.

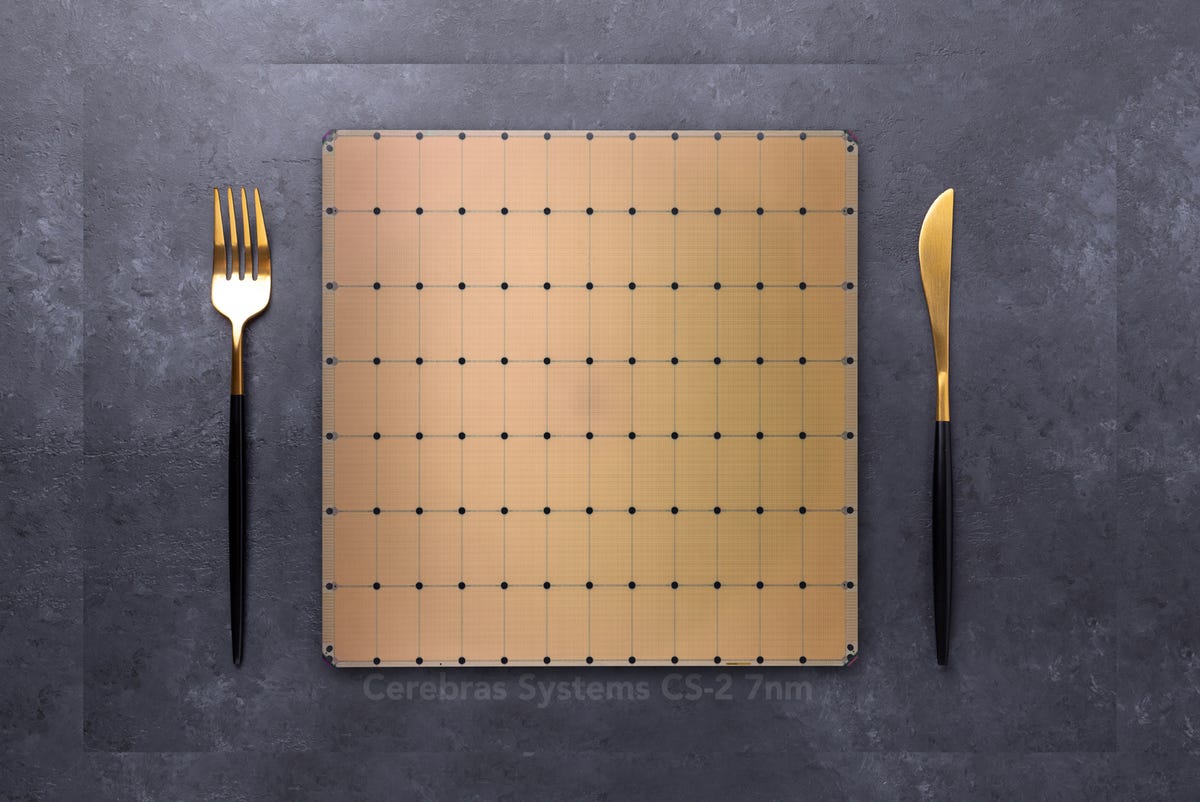

Cerebras’s WSE chip, the biggest semiconductor in the world, is a giant machine for doing one thing over and over, the matrix multiplications that power deep learning.

The benchmark test MLPerf has become the yardstick by which companies purchase computers, based on their speed of deep learning compute. The deep learning revolution has become the deep learning industry, as it has established matrix math as the new measure of compute.

The scholars who assembled the AI100 report are making a point about research directions. Many scholars are concerned that deep learning has gotten no closer to the goal of understanding or achieving human-like intelligence, and doesn’t seem likely to any time soon.

Deep learning critics such as NYU psychologist Gary Marcus have organized whole seminars to explore a way to merge deep learning with other approaches, such as symbolic reasoning, to find a way past what seems the limited nature of deep learning’s monotonic approach.

The critique is elegantly encapsulated by one of the report’s study panel members, Melanie Mitchell of the Santa Fe Institute and Portland State University. Mitchell wrote in a paper this year, titled “Why AI is harder than we think,” that deep learning is running up against severe limitations despite the optimistic embrace of the approach. Mitchell cites as evidence the fact that much-ballyhooed goals such as the long-heralded age of self-driving cars have failed to materialize.

As Mitchell argues, quite astutely, deep learning barely knows how to talk about intelligence, much less replicate it:

It’s clear that to make and assess progress in AI more effectively, we will need to develop a better vocabulary for talking about what machines can do. And more generally, we will need a better scientific understanding of intelligence as it manifests in different systems in nature. This will require AI researchers to engage more deeply with other scientific disciplines that study intelligence.

All that is no doubt true, and yet, the computer industry loves incrementalism. Sixty years of making integrated circuits double in speed and double in speed have gotten the computer world hooked on things that can be easily replicated. Deep learning, based on a sea of matrix multiplications, again, is a sublime target, a terrifically simple task to run faster and faster.

As long as computer companies can keep churning out improvements in matrix acceleration, the deep learning industry, as the mainstream of compute, will have a staying power that has to be reckoned with.

This article originally appeared in ZDNet.