Intel Labs, in collaboration with the Italian Institute of Technology and the Technical University of Munich, has introduced a new approach to neural network-based object learning. It specifically targets future applications such as robotic assistants that interact with unconstrained environments in settings like logistics, healthcare, or elderly care. This research is a crucial step toward improving the capabilities of future assistive and manufacturing robots. The approach combines neuromorphic computing with new interactive, online object-learning methods so that robots can learn new objects after they have been deployed.

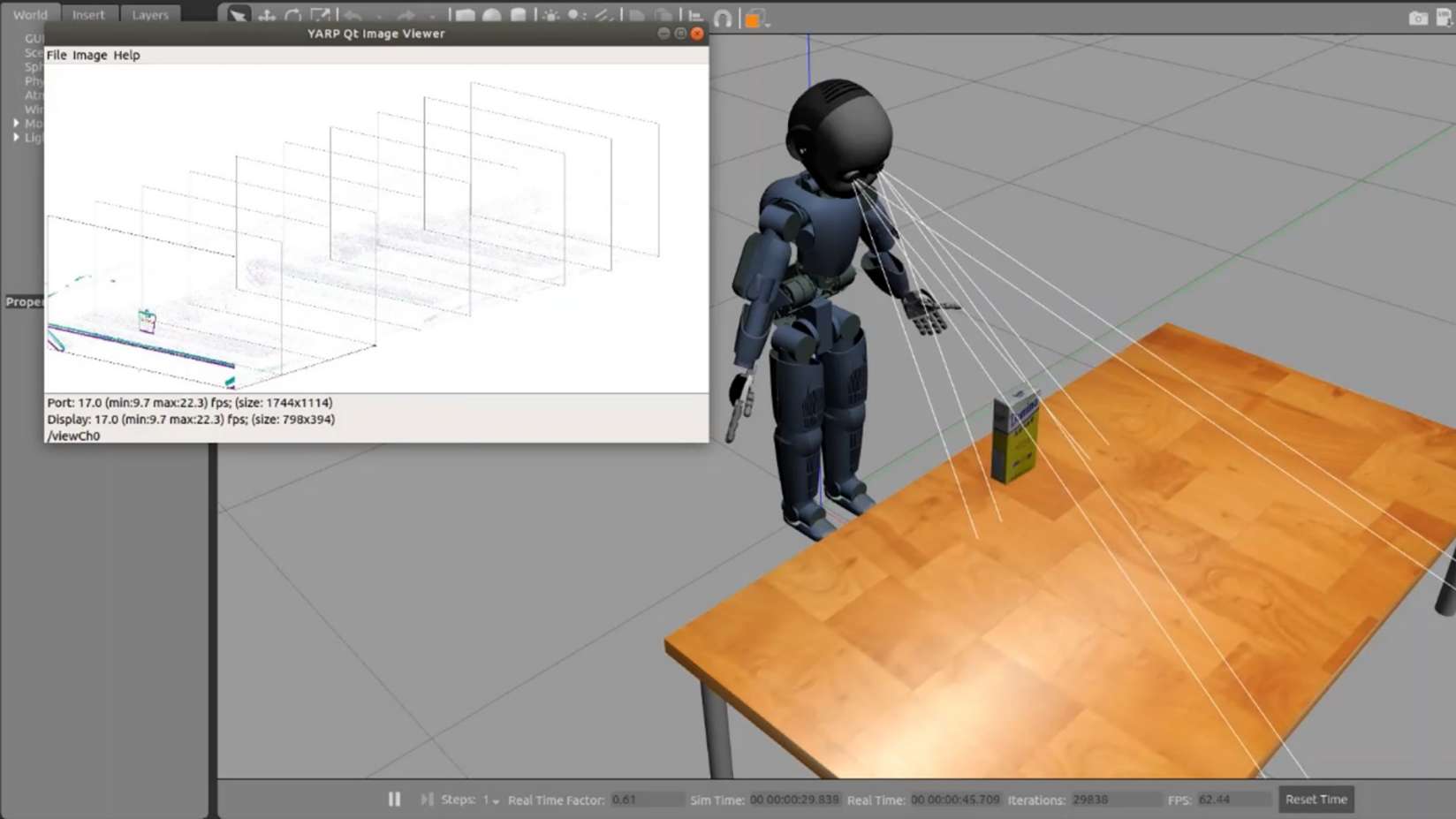

Using these new models, Intel and its collaborators demonstrated continual interactive learning on Intel's neuromorphic research chip, Loihi. They measured up to 175x lower energy to learn a new object instance, with similar or better speed and accuracy compared to conventional methods running on a central processing unit (CPU). To accomplish this, the researchers implemented a spiking neural network architecture on Loihi that confined learning to a single layer of plastic synapses and accounted for different object views by recruiting new neurons on demand. This allowed learning to unfold autonomously while the robot interacts with the user.
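To make the "single plastic layer plus on-demand neuron recruitment" idea more concrete, here is a minimal, non-spiking toy sketch. It is not the authors' Loihi implementation: it assumes that some upstream (frozen) feature extractor already turns each object view into a fixed-length vector, and the class name `RecruitingLayer`, the cosine-similarity `threshold`, and the learning rate `lr` are hypothetical choices made for illustration. When a labeled view closely matches an existing neuron for that object, only that neuron's weights are nudged; otherwise a new neuron is recruited for the new view.

```python
import numpy as np


class RecruitingLayer:
    """Hypothetical toy layer (not the paper's spiking model): one plastic
    weight vector per output neuron, with new neurons recruited on demand."""

    def __init__(self, threshold: float = 0.9, lr: float = 0.5):
        self.threshold = threshold  # cosine similarity required to reuse a neuron
        self.lr = lr                # step size for the local weight update
        self.weights: list = []     # one normalized weight vector per neuron
        self.labels: list = []      # object label attached to each neuron

    @staticmethod
    def _normalize(x: np.ndarray) -> np.ndarray:
        return x / np.linalg.norm(x)

    def predict(self, features: np.ndarray):
        """Return (label, similarity) of the most active neuron, or (None, 0.0)."""
        if not self.weights:
            return None, 0.0
        x = self._normalize(np.asarray(features, dtype=float))
        sims = np.array([w @ x for w in self.weights])
        best = int(np.argmax(sims))
        return self.labels[best], float(sims[best])

    def learn(self, features: np.ndarray, label: str) -> bool:
        """Update the best-matching neuron for `label`, or recruit a new one.

        Returns True if a new neuron was recruited, False otherwise."""
        x = self._normalize(np.asarray(features, dtype=float))
        candidates = [i for i, l in enumerate(self.labels) if l == label]
        if candidates:
            sims = [float(self.weights[i] @ x) for i in candidates]
            j = int(np.argmax(sims))
            if sims[j] >= self.threshold:
                i = candidates[j]
                # Local update: move only this neuron's weights toward the new view.
                self.weights[i] = self._normalize(
                    self.weights[i] + self.lr * (x - self.weights[i])
                )
                return False
        # Unknown object, or a known object seen from a new view: recruit a neuron.
        self.weights.append(x)
        self.labels.append(label)
        return True
```

In this sketch, showing the same object from a very different angle adds a second neuron under the same label instead of overwriting the first. This mirrors, in a simplified way, how recruiting neurons on demand lets one object be represented by several views without retraining the rest of the network.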

The research was published in the paper “Interactive continual learning for robots: a neuromorphic approach,” which was named “Best Paper” at this year’s International Conference on Neuromorphic Systems (ICONS) hosted by Oak Ridge National Laboratory.

“When a human learns a new object, they take a look, turn it around, ask what it is, and then they’re able to recognize it again in all kinds of settings and conditions instantaneously,” said Yulia Sandamirskaya, robotics research lead in Intel’s neuromorphic computing lab and senior author of the paper. “Our goal is to apply similar capabilities to future robots that work in interactive settings, enabling them to adapt to the unforeseen and work more naturally alongside humans. Our results with Loihi reinforce the value of neuromorphic computing for the future of robotics.”

For further exploration, read about Intel Labs’ research on Intel.com’s neuromorphic computing page.