Google claims to have demonstrated something called “quantum supremacy” in a paper published in Nature. This would mark a significant milestone in the development of a new type of computer, known as a quantum computer, that could perform very difficult calculations much faster than anything possible on conventional “classical” computers. But a team from IBM has published its own paper claiming that the Google result can be reproduced on existing supercomputers.

While Google vs. IBM might make a good story, this disagreement between two of the world’s biggest technology companies rather distracts from the real scientific and technological progress behind both teams’ work. Despite how it might sound, even exceeding the milestone of quantum supremacy wouldn’t mean quantum computers are about to take over. On the other hand, just approaching this point has exciting implications for the future of the technology.

Quantum computers represent a new way of processing data. Instead of storing information in “bits” as 0s or 1s like classical computers do, quantum computers use the principles of quantum physics to store information in “qubits” that can also be in states of 0 and 1 at the same time. In theory, this allows quantum machines to perform certain calculations much faster than classical computers.
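To make the idea of a qubit slightly more concrete, here is a minimal sketch in Python (standard library only) of the usual textbook description: a single qubit is represented by two complex amplitudes, one for 0 and one for 1, and the probability of each measurement outcome is the squared magnitude of its amplitude. The function names and the choice of the Hadamard gate as the example operation are illustrative assumptions, not anything taken from the Google or IBM papers.

```python
import math

# A single-qubit state is a pair of complex amplitudes (a0, a1) for the
# outcomes 0 and 1, normalised so that |a0|^2 + |a1|^2 == 1.
ZERO = (1 + 0j, 0 + 0j)  # definitely 0, just like a classical bit


def hadamard(state):
    """Apply the Hadamard gate, which turns 0 into an equal mix of 0 and 1."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return tuple(abs(a) ** 2 for a in state)


superposed = hadamard(ZERO)
p0, p1 = probabilities(superposed)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

Until it is measured, the qubit carries both amplitudes at once; a measurement returns 0 or 1, each with probability one half. Describing n qubits this way takes 2^n amplitudes, which is where the potential speed-up (and the difficulty of classical simulation) comes from.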

In 2012, Professor John Preskill coined the term “quantum supremacy” to describe the point when quantum computers become powerful enough to perform some computational task that classical computers could not do in a reasonable timeframe. He deliberately didn’t require the computational task to be a useful one. Quantum supremacy is an intermediate milestone, something to aim for long before it is possible to build large, general-purpose quantum computers.

In its quantum supremacy experiment, the Google team performed one of these difficult but useless calculations, sampling the output of randomly chosen quantum circuits. They also carried out computations on the world’s most powerful classical supercomputer, Summit, and estimated it would take 10,000 years to fully simulate this quantum computation. IBM’s team have proposed a method for simulating Google’s experiment on the Summit computer, which they estimated would take only two days rather than 10,000 years.
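For readers who want to see what “sampling the output of randomly chosen quantum circuits” involves, here is a toy brute-force version in Python (standard library only). It is a sketch under simplifying assumptions, not Google’s method: the gate set (√X, √Y and a T phase gate, with controlled-Z gates between neighbouring qubits), the circuit depth and all function names are illustrative choices, whereas the real experiment used 53 qubits and a different two-qubit gate. The point it illustrates is that a simulator of this kind stores one complex amplitude for every possible bitstring, 2^n of them for n qubits.

```python
import cmath
import math
import random

# Illustrative gate set (an assumption, not the Sycamore gate set).
SQRT_X = ((0.5 + 0.5j, 0.5 - 0.5j), (0.5 - 0.5j, 0.5 + 0.5j))
SQRT_Y = ((0.5 + 0.5j, -0.5 - 0.5j), (0.5 + 0.5j, 0.5 + 0.5j))
T_GATE = ((1, 0), (0, cmath.exp(1j * math.pi / 4)))
GATES = [SQRT_X, SQRT_Y, T_GATE]


def apply_single(state, q, gate):
    """Apply a 2x2 gate ((a, b), (c, d)) to qubit q of a state vector."""
    (a, b), (c, d) = gate
    new = state[:]
    bit = 1 << q
    for i in range(len(state)):
        if not i & bit:          # index where qubit q is 0 ...
            j = i | bit          # ... paired with the index where qubit q is 1
            x, y = state[i], state[j]
            new[i] = a * x + b * y
            new[j] = c * x + d * y
    return new


def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign of every amplitude where both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    return [-amp if (i & mask) == mask else amp for i, amp in enumerate(state)]


def random_circuit(n, depth, rng):
    """Run a random circuit on n qubits starting from 00...0; return the state."""
    state = [0j] * (2 ** n)      # one amplitude per possible bitstring
    state[0] = 1 + 0j
    for layer in range(depth):
        for q in range(n):       # a random single-qubit gate on every qubit
            state = apply_single(state, q, rng.choice(GATES))
        for q in range(layer % 2, n - 1, 2):  # CZ on alternating neighbour pairs
            state = apply_cz(state, q, q + 1)
    return state


def sample_bitstrings(state, n, shots, rng):
    """Draw bitstrings with probability |amplitude|^2 (the Born rule)."""
    probs = [abs(a) ** 2 for a in state]
    picks = rng.choices(range(len(state)), weights=probs, k=shots)
    return [format(i, f"0{n}b") for i in picks]


rng = random.Random(2019)
n = 4
state = random_circuit(n, depth=8, rng=rng)
print(len(state), "amplitudes for", n, "qubits")
print(sample_bitstrings(state, n, shots=5, rng=rng))
```

Each extra qubit doubles the length of `state` and the work done by every gate. At 53 qubits the list would need 2^53 entries, far beyond the memory of any ordinary computer, which is why simulating Google’s experiment is such a stretch even for Summit.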

Random circuit sampling has no known practical use, but there are very good mathematical and empirical reasons to believe it is very hard to replicate on classical computers. More precisely, for every additional qubit the quantum computer uses to perform the calculation, a classical computer would need to double its computation time to do the same.

The IBM paper does not challenge this exponential growth. What the IBM team did was find a way of trading increased memory usage for shorter computation time. They used this to show how it might be possible to squeeze a simulation of the Google experiment onto the Summit supercomputer, by exploiting the vast memory resources of that machine. (They estimate simulating the Google experiment would require memory equivalent to about 10m regular hard drives.)
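The exponential growth behind this argument is easy to see with a little arithmetic. The sketch below (Python, standard library only) estimates the memory a naive simulator would need just to store the full list of 2^n amplitudes, under the assumption, ours rather than IBM’s, that each amplitude is a double-precision complex number taking 16 bytes. It does not reproduce IBM’s memory-for-time trade-off; it only shows why memory is the bottleneck and why each extra qubit doubles it.

```python
# Assumption for illustration: each amplitude is a double-precision complex
# number (16 bytes). Single precision (8 bytes) would halve every figure.
BYTES_PER_AMPLITUDE = 16
PETABYTE = 10 ** 15


def state_vector_bytes(n_qubits, bytes_per_amplitude=BYTES_PER_AMPLITUDE):
    """Memory needed to hold all 2**n complex amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (50, 53, 55):
    print(f"{n} qubits: {state_vector_bytes(n) / PETABYTE:,.1f} PB")
```

Under these assumptions 53 qubits already need about 144 petabytes, and 55 qubits need four times as much: the doubling that lets “a couple more qubits” put the calculation back out of reach.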

The 53-qubit Google experiment is right at the limit of what can be simulated classically. IBM’s new algorithm might just bring the calculation within reach of the world’s biggest supercomputer. But add a couple more qubits and the calculation will be beyond reach again. The Google paper anticipates this, stating: “We expect that lower simulation costs than reported here will eventually be achieved, but we also expect that they will be consistently outpaced by hardware improvements on larger quantum processors.”

Whether this experiment is just within reach of the world’s most powerful classical supercomputer, or just beyond, isn’t really the point. The term “supremacy” is somewhat misleading in that it suggests a point when quantum computers can outperform classical computers at everything. In reality, it just means they can outperform classical computers at something. And that something might be an artificial demonstration with no practical applications. In retrospect, the choice of terminology was perhaps unfortunate (though Preskill recently wrote a reasoned defence of it).

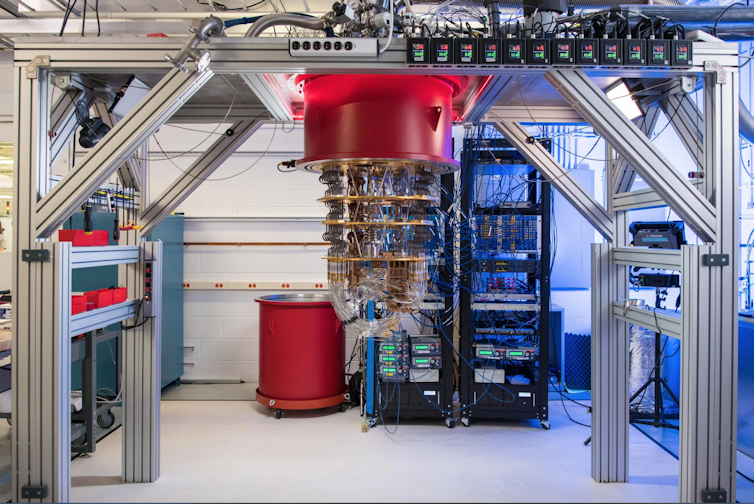

Impressive science

Yet Google’s work is a significant milestone. With quantum hardware reaching the limits of what can be matched classically, it opens up the intriguing possibility that these devices – or devices only slightly larger – could have practical applications that are beyond the reach of classical supercomputers. On the other hand, we don’t know of any such applications yet, even for devices with a few hundred qubits. It’s a very interesting and challenging scientific question, and an extremely active area of research.

As such, the Google results are an impressive piece of experimental science. They do not imply that quantum computers are about to revolutionise computing overnight (and the Google paper never claims this). Nor are these useless results that achieve nothing new (and the IBM paper doesn’t claim this). The truth is somewhere in between. These new results undoubtedly move the technology forward, just as it has been steadily progressing for the last couple of decades.

As quantum computing technology develops, it is also pushing the design of new classical algorithms to simulate larger quantum systems than were previously possible. IBM’s paper is an example of that. This is also useful science, not only because it ensures that quantum computing progress is continually benchmarked fairly against the best classical techniques, but also because simulating quantum systems is itself an important scientific computing application.

This is how science and technology progress. Not in one dramatic and revolutionary breakthrough, but in a whole series of small breakthroughs, with the academic community carefully scrutinising, criticising and refining each step along the way. Only a few of these advances and debates hit the headlines. The reality is both less dramatic and more interesting.

Toby Cubitt, Reader (Associate Professor) in Quantum Information, UCL

This article is republished from The Conversation under a Creative Commons license. Read the original article.