As humanity continues to implement new AI solutions across many industries, the usefulness and ethics of these projects are still often debated. Experts emphasize that developers of any new AI tool must check it for ‘goodness’, take responsibility for its safety, and consider its potential for harm. Still, the question of who should control the creation and use of new AI technologies, specifically synthetic media and deepfake tools, remains open. A second open question is whether making the code of such tools available to the general public creates risks of its own.

In this article, I will share my thoughts on why it’s better and safer to put new AI technology in the hands of businesses rather than release it into the wild.

Why the full-body swap technology shouldn’t be open-source

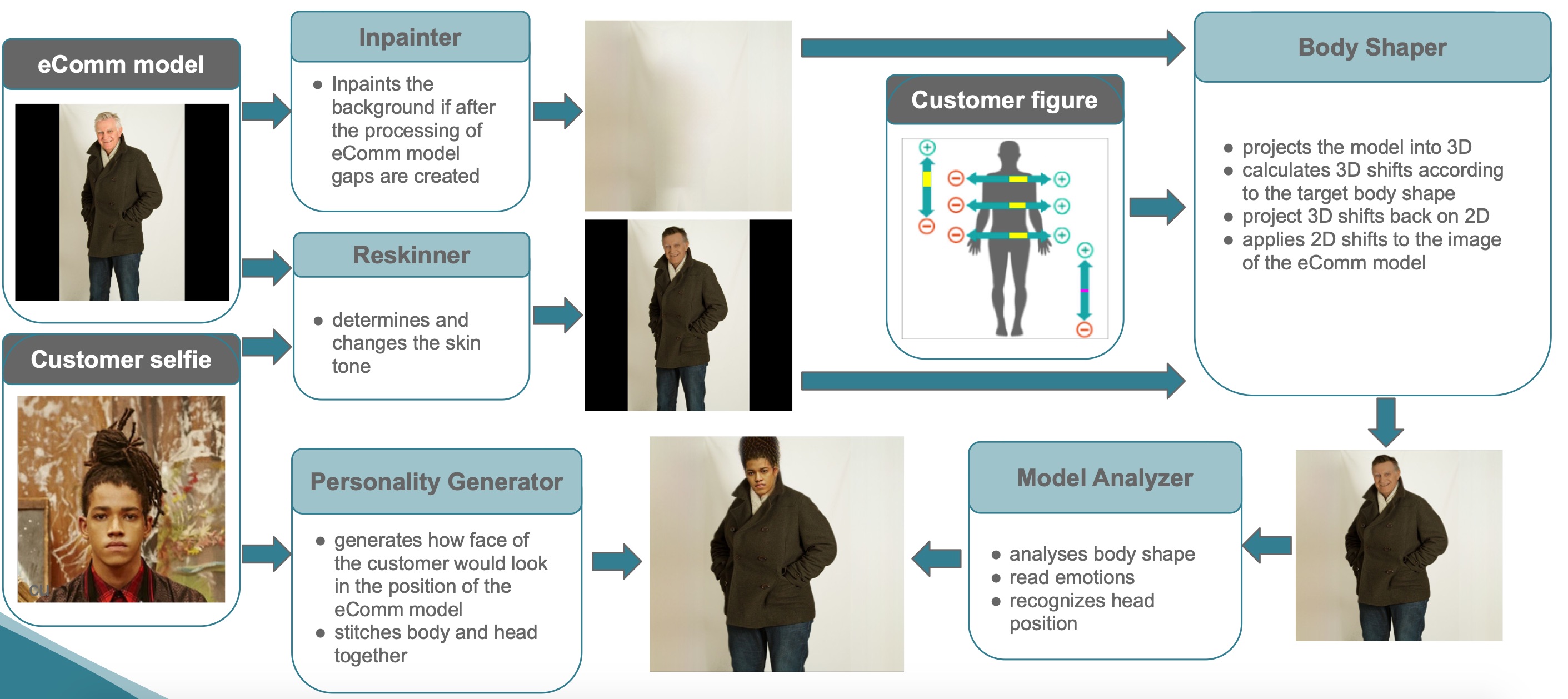

In 2018, I started working on a digital fitting room powered by machine learning. It required overcoming several new problems that remain unsolved in machine learning to this day. There was no available information on how to change body proportions according to specific parameters, so no one knew how to use AI to make bodies appear thicker or slimmer. Even now, this problem is not fully solved.

At first sight, what is so dangerous about a technology that changes the image of one person into another in a few seconds? This feature underlies all digital fitting rooms and webshops. In 2018, I had no idea my technology would later be called a “full-body swap” or deepfake tool.

Only after I published a demo in August 2020 and various shady organizations started approaching me did I realize that AI body swap is a bomb: a bomb in both senses, as a technological breakthrough and as something dangerous if its code gets into the wrong hands.

If the source code of a full-body swap technology leaked (or if I published it myself), it would still hardly become a mass technology. It would more likely end up with people who don’t advertise their work on deepfakes, such as creators of fake porn and political blackmail material. Deepfake tech is simply too appealing for them not to appropriate it and use it with malicious intent.

A team of high-class ML professionals would figure out my code in about a week. In roughly six months, they’d have a production-grade full-body swap technology. After seeing the feedback and the level of interest in my technology when the demos were published, I realized that the only way to make sure it ends up in the right hands was to start collaborating with businesses whose actions are public and regulated by law.

Why is it safer to cooperate with businesses?

Keeping AI and deepfake imagery under control is possible only with total transparency and public oversight. The difference between business ownership of deepfake technology and uncontrolled public access to it comes down to two things: whether we know whom to prosecute in case of misuse, and whether we can provide tools that prevent malicious use.

With public access, the technology is usually used anonymously and without any community rules. Businesses, by contrast, can regulate it at every level: user filtering, content moderation, banning systems, and, most importantly, tracking of their own synthesized content.

Detection of synthesized content is one of the most critical next steps in developing the whole AI industry. Businesses that own such tools must provide detectors that identify the content those tools produce.

What could deepfake detectors look like?

One example is neural watermarks on videos, which work like the watermark on a banknote. The imagery carries certain information (imperceptible to the human eye) that proves it was synthesized with XXX tech, even if it has been compressed, resized, or partially cropped.

However, video watermarking takes a long time to develop and is resource-intensive, while deepfakes are already spreading. Neural watermarks also only tell us that content was AI-generated, not who generated it. Who should be prosecuted when fake news spreads? It should be a person, not the company that owns the technology. After all, no one sues the makers of Photoshop over objectionable content someone created with it, because everyone understands that Photoshop is just a tool.

Now we at Reface are creating a faster and more convenient alternative to video watermarks. Each synthesized video made with our application will have its own footprint (hash). Users can delete the video from the database, but the hash will be kept regardless.

Therefore, even if someone creates a deepfake, removes it from the platform, and uploads it to YouTube, the company can compare that video’s footprint with the ones in its database and trace it back to a specific user.
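The footprint scheme can be illustrated with a small registry. This is a minimal, hypothetical sketch, not Reface’s implementation: the article mentions a model that generates the video hash, whereas here a classic perceptual “average hash” over a few evenly spaced keyframes stands in for it. The names `FootprintRegistry`, `video_footprint`, and `trace`, the keyframe count, and the `max_distance` threshold are illustrative assumptions, and frames are assumed to be grayscale 2D lists of at least 8x8 pixels.

```python
# Hypothetical sketch of a footprint (hash) registry for synthesized videos.
# Assumption: a simple perceptual "average hash" replaces a learned hashing model.
from dataclasses import dataclass, field
from typing import Optional

Frame = list[list[int]]  # grayscale pixel values 0-255, at least 8x8


def frame_hash(frame: Frame, size: int = 8) -> int:
    """Average hash: shrink to size x size block means; a bit is 1 if its block >= overall mean."""
    h, w = len(frame), len(frame[0])
    blocks = []
    for by in range(size):
        for bx in range(size):
            rows = range(by * h // size, (by + 1) * h // size)
            cols = range(bx * w // size, (bx + 1) * w // size)
            values = [frame[y][x] for y in rows for x in cols]
            blocks.append(sum(values) / len(values))
    mean = sum(blocks) / len(blocks)
    bits = 0
    for value in blocks:
        bits = (bits << 1) | int(value >= mean)
    return bits


def video_footprint(frames: list[Frame], keyframes: int = 4) -> tuple[int, ...]:
    """Hash a fixed number of evenly spaced keyframes so all footprints have equal length."""
    if not frames:
        raise ValueError("video has no frames")
    n = len(frames)
    return tuple(frame_hash(frames[i * n // keyframes]) for i in range(keyframes))


def footprint_distance(a: tuple[int, ...], b: tuple[int, ...]) -> float:
    """Mean Hamming distance (number of differing bits) between matching keyframe hashes."""
    if len(a) != len(b):
        raise ValueError("footprints must have the same length")
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b)) / len(a)


@dataclass
class FootprintRegistry:
    """Hypothetical platform-side store: videos can be deleted, footprints are kept."""
    videos: dict[str, list[Frame]] = field(default_factory=dict)
    footprints: list[tuple[tuple[int, ...], str]] = field(default_factory=list)

    def register(self, video_id: str, user_id: str, frames: list[Frame]) -> None:
        self.videos[video_id] = frames
        self.footprints.append((video_footprint(frames), user_id))

    def delete_video(self, video_id: str) -> None:
        # Removes the video itself; its footprint stays in self.footprints.
        self.videos.pop(video_id, None)

    def trace(self, frames: list[Frame], max_distance: float = 5.0) -> Optional[str]:
        """Return the user whose stored footprint is closest to this video, if close enough."""
        candidate = video_footprint(frames)
        best = None
        for stored, user_id in self.footprints:
            d = footprint_distance(candidate, stored)
            if d <= max_distance and (best is None or d < best[0]):
                best = (d, user_id)
        return best[1] if best else None
```

A perceptual hash, rather than a cryptographic one such as SHA-256, fits this use: re-encoding or re-uploading a video changes its bytes, which would change a cryptographic hash completely, whereas small brightness or compression changes leave most bits of a perceptual hash intact. A production system would also need a far more robust hash and an index that scales beyond a linear scan.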

Building a model that generates a video hash is easier and faster than embedding watermarks. Later, the same footprint detector could be released as an antivirus or browser extension that warns users when a web page contains video generated by a neural network, together with the logo of the company whose technology produced it.

The development of AI technology and the search for new ML solutions can no longer be stopped. Any motivated developer or machine learning engineer can arrive at a working approach without outside help, given time.

This is why it’s better to offer such solutions to businesses that follow at least some code of ethics than to ride a short wave of hype on GitHub.

Companies that work with AI and create synthetic media get a lot of backlash from the public and the mass media. However, the companies that openly admit to using such technologies are also the ones that play fair. They go out of their way to make misuse of the resulting products impossible, or at least as hard as possible. We need a pact: an agreement that every company creating synthetic media must include watermarks or footprints, or provide other detectors for identifying it. Then we will always be able to locate the source of “official” deepfakes.

About the Author

Alexey Chaplygin is VP of Product Delivery at Reface (an AI app for swapping faces in videos, GIFs, and images). Alexey is responsible for product development, the implementation of new ML tools, and the regulated use of synthetic media.

This article is republished from hackernoon.com